The following articles are contained below:

A Brief History of Quantum Theory 1925 – Y2K

Fermions and Bosons: The Janus of Quantum Theory

In Search of Perfect Symmetry

Quantum Loop Gravity

"GOD DOES NOT PLAY DICE WITH THE UNIVERSE"

A. Einstein

"EINSTEIN, DON'T TELL GOD WHAT TO DO"

N. Bohr

A Brief History of Quantum Theory 1925 – Y2K

Part I Inward Bound --- Subatomic theory

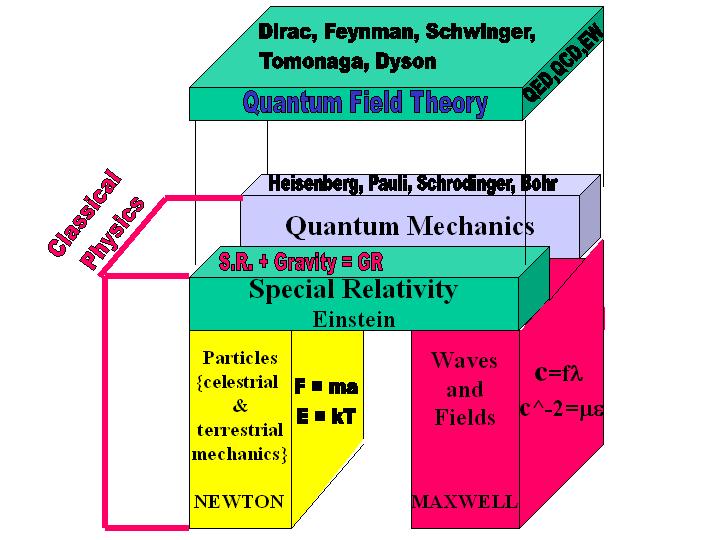

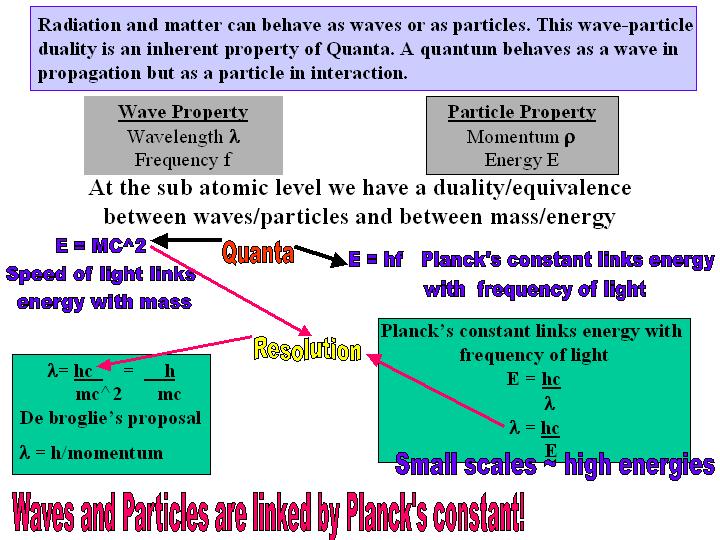

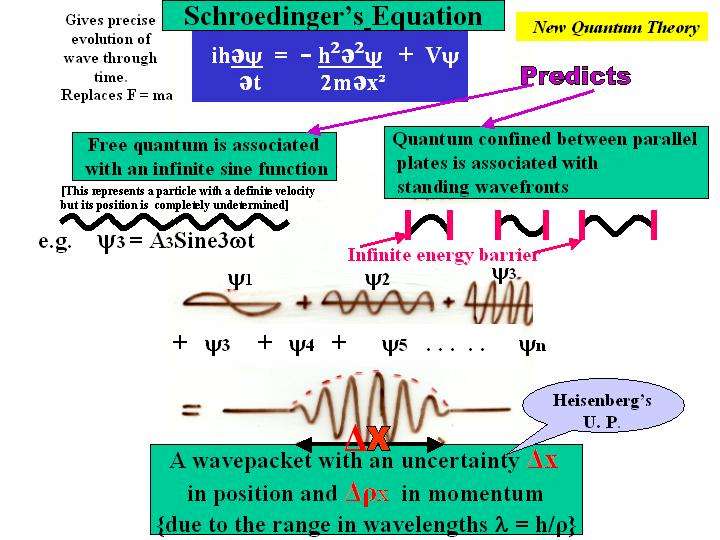

There are four formulations of modern quantum theory (QT). Historically, Heisenberg’s matrix mechanics was the first to be published; the non-commutative nature of matrix multiplication is responsible for the appearance of his uncertainty principle. Schrödinger’s wave equation was quick to follow, its publication having been delayed by his inability to produce a satisfactory relativistic version. The nature of the wavepacket that describes a quantum entity inherently produces the uncertainty in its position and momentum (the latter being related to the constituent wavelengths of the packet via de Broglie’s equation). Dirac showed the equivalence of both these methods and introduced his own formulation in terms of vectors in Hilbert space, using his ⟨bra|ket⟩ notation. [Hilbert space was originally used by von Neumann in his attempt to produce a universal axiomatic formulation of quantum theory.] Dirac was also the first to formulate a satisfactory relativistic quantum theory of the electron, a union which demanded a field theory and necessitated the introduction of intrinsic spin and antimatter! Finally there is Feynman’s path integral (sum over histories) technique, which is used in the more advanced treatment of the interactions of ‘particles’ with their ‘fields’ (whose quanta are fermions and bosons respectively).
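The wavepacket argument above can be checked numerically. The sketch below is a minimal illustration, assuming a Gaussian packet, units in which ħ = 1 (so that de Broglie's relation reads p = ħk), and arbitrary grid parameters; it measures the spread in position directly and the spread in momentum from a Fourier transform of the same packet, i.e. from its constituent wavelengths.

```python
import numpy as np

HBAR = 1.0  # illustrative units (assumption): hbar = 1, so p = hbar * k


def spreads(sigma, k0=5.0, n=4096, box=200.0):
    """Return (delta_x, delta_p) for a Gaussian wavepacket of width sigma.

    The packet psi(x) ~ exp(-x^2 / (4 sigma^2)) * exp(i k0 x) is sampled on a grid;
    delta_p comes from the Fourier transform, i.e. from the packet's mix of
    wavelengths, via p = hbar * k.
    """
    x = np.linspace(-box / 2, box / 2, n, endpoint=False)
    dx = x[1] - x[0]
    psi = np.exp(-x**2 / (4 * sigma**2) + 1j * k0 * x)

    # Position spread from |psi(x)|^2, normalised to a probability distribution.
    prob_x = np.abs(psi) ** 2
    prob_x /= prob_x.sum()
    mean_x = np.sum(x * prob_x)
    delta_x = np.sqrt(np.sum((x - mean_x) ** 2 * prob_x))

    # Wavenumber spread from |FFT(psi)|^2, normalised the same way.
    k = 2 * np.pi * np.fft.fftfreq(n, d=dx)
    prob_k = np.abs(np.fft.fft(psi)) ** 2
    prob_k /= prob_k.sum()
    mean_k = np.sum(k * prob_k)
    delta_k = np.sqrt(np.sum((k - mean_k) ** 2 * prob_k))

    return delta_x, HBAR * delta_k


for sigma in (0.5, 1.0, 2.0, 4.0):
    dx_, dp_ = spreads(sigma)
    print(f"sigma={sigma}: dx={dx_:.4f}, dp={dp_:.4f}, dx*dp={dx_ * dp_:.4f}")
```

Narrowing the packet in space widens its spread of wavelengths and vice versa; for a Gaussian the product stays at the minimum value ħ/2 allowed by Heisenberg's relation Δx Δp ≥ ħ/2.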

If we consider a projectile such as a football in its motion from the ground to its position of maximum height, its path is not the shortest route (i.e. a straight line) but rather half of a parabola. What is minimised, however, is its action, i.e. its (kinetic minus potential) energy integrated over time; this is known as the principle of least action. The fact that this so-called Lagrangian formulation is symmetric (unaltered) under translations in space and in time yields the conservation of momentum and energy respectively. According to classical physics, objects move in a force field so as to make this action stationary (usually a minimum), and in just one page of his book Dirac showed that the sum of the contributions of the various paths that a quantum can take (each with its own probability amplitude) reduces to that of the classical stationary action in the limit that Planck’s constant h tends to zero. In fact it is because of the small numerical value of h (~10^-34 J s) that quantum effects are not readily observed except at the atomic scale. In other words, the principle of least action is a consequence of quantum mechanics and the smallness of h. Feynman later elaborated this in his sum over histories technique, in which we associate with each path a probability amplitude determined by the action along that path, and sum all of these to find the overall probability of a quantum moving between two states (positions). This is shown schematically in Feynman diagrams, but it is important to realise that the actual calculations involve the sum over all the possible paths and not just the particular lines shown in any given representative diagram.
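As a rough numerical check of the football example, the sketch below discretises the action S = ∫(KE − PE) dt and compares the parabolic path with the straight line joining the same end points, and with perturbed versions of the parabola. The mass, g and flight time are illustrative assumptions, and the code does not perform the quantum sum itself; it only exhibits the classical stationary (here minimal) action that the sum over histories reduces to as h → 0.

```python
import numpy as np

# Illustrative assumptions: 1 kg ball, g = 9.81 m/s^2, 1 s from ground to apex.
MASS, G, T = 1.0, 9.81, 1.0
t = np.linspace(0.0, T, 2001)


def action(y):
    """Discretised action: sum of (kinetic - potential energy) * dt along path y(t)."""
    dt = np.diff(t)
    v = np.diff(y) / dt                  # velocity on each time step
    y_mid = 0.5 * (y[:-1] + y[1:])       # height at the middle of each step
    lagrangian = 0.5 * MASS * v**2 - MASS * G * y_mid
    return float(np.sum(lagrangian * dt))


# Classical path: launched upward at v0 = G*T so that it reaches its apex at t = T.
y_classical = G * T * t - 0.5 * G * t**2
# The "shortest route": a straight line between the same two end points.
y_straight = y_classical[-1] * t / T


def perturbed(a):
    """The classical path plus a bump of size a that vanishes at both end points."""
    return y_classical + a * np.sin(np.pi * t / T)


print("classical :", action(y_classical))
print("straight  :", action(y_straight))
for a in (-0.5, -0.1, 0.1, 0.5):
    print(f"bump {a:+.1f}:", action(perturbed(a)))
```

The parabola gives the lowest value (analytically −g²T³/6 ≈ −16.04 J s for these numbers), the straight line a higher one (−g²T³/8 ≈ −12.03 J s), and every bump raises the action in proportion to a², because the first-order change vanishes on the classical path.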

To first order, this technique just retrieves Schrödinger’s equation. However, the power of this method is that we can use higher-order perturbations to include the effect of the movement of a particle (e.g. an electron) in a field (electromagnetism), in a way which also treats the field quantum mechanically (involving photons), thus producing quantum electrodynamics (QED). [The concept of a force is therefore subsumed by that of an interaction, and in the case of the weak interaction there is no force manifested.] The path integral is also important because it makes quantum theories automatically consistent with special relativity, provided that the Lagrangian itself is already Lorentz covariant (i.e. it does not depend on any one coordinate system of 4-dimensional space-time). Unfortunately these techniques are arduous, as indicated by the fact that it took 2 teams of mathematicians 4 years to work out one value (and it took one year to discover an error in the calculation)! It did, however, result in 3 Nobel prizes, but as Weinberg once protested, "how can we make any money out of this".

These perturbation techniques work by first using known exact solutions (which put us in the right ballpark) and then making progressively finer approximations. However, at each progressively higher energy scale (corresponding to a greater complexity of Feynman diagrams), we need to renormalise our answers to ensure that all our probabilities again add up to unity. It is a bit like a fractal, in which approximate measurements of the perimeter of the shape yield a different answer depending on the magnification (energy scale) at which we carry out the measurement. Hence, if left 'unregulated', these perturbation techniques are inherently divergent, producing infinities in, for example, the self-energy of an electron. Renormalization is therefore a necessary technique of self-consistent subtraction, which allows the theoretical values for the mass, charge and magnetic moment of an electron to agree with the experimental values (in particular those of the Lamb shift, the Landé g-factor and the hyperfine anomaly).

Much of the work up until 1950 was focused on QED, i.e. the production of a consistent quantum theory of the interaction of charged particles (electrons) with an electromagnetic field. Initial steps were undertaken by Dirac with his method of second quantization (an application of his beloved transformation theory), in which an electromagnetic field is represented as an assembly of quanta (photons). This introduced creation and annihilation operators that obeyed certain commutation relations, which ensured that the particles being described satisfied Bose-Einstein statistics. This treatment was not, however, relativistic (as Maxwell's field description is), but the method was extended to classical matter wave fields by Jordan, who introduced the correct anticommutation rules so that the particles (electrons) obeyed Fermi-Dirac statistics (but this again was not Lorentz covariant). [Jordan was the first to suggest that the commutation rules that effect the transition from the classical to the quantum description of a system of particles should also be applied to a system with an infinite number of degrees of freedom, that is, to a field system.] Henceforth a quantum field could be interpreted as expressing the probability of finding a given distribution of particle states throughout space and time, or alternatively an assembly of quanta could be described as such a field; thus both the wave and particle natures are incorporated in the notion of a quantum field! Indeed, the other great achievement of Dirac was producing the correct formulation for making quantum theory (QT) consistent with special relativity (SR), which also demonstrated the necessity of a field theory. The famous equation that bears his name not only predicted the existence of antimatter but also introduced the mathematical structure of spinor theory.
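The difference between Dirac's commutation rules for photons and Jordan's anticommutation rules for electrons can be seen with small matrices. This is a minimal sketch, assuming a single mode of each field and a bosonic ladder truncated at n_max quanta; the truncation is an artefact of using finite matrices, not part of the physics, and shows up only in the last diagonal entry of the commutator.

```python
import numpy as np


def boson_ops(n_max):
    """Annihilation/creation matrices for one bosonic mode, truncated at n_max quanta.

    Basis |0>, |1>, ..., |n_max>; a|n> = sqrt(n)|n-1>.
    """
    a = np.diag(np.sqrt(np.arange(1, n_max + 1)), k=1)
    return a, a.conj().T


def fermion_ops():
    """Annihilation/creation matrices for one fermionic mode: basis |empty>, |occupied>."""
    c = np.array([[0.0, 1.0],
                  [0.0, 0.0]])
    return c, c.conj().T


a, a_dag = boson_ops(n_max=5)
commutator = a @ a_dag - a_dag @ a          # [a, a+]: identity except the truncated corner
number_b = np.diag(a_dag @ a)               # allowed occupations 0, 1, 2, ...

c, c_dag = fermion_ops()
anticommutator = c @ c_dag + c_dag @ c      # {c, c+} = 1
double_creation = c_dag @ c_dag             # creating two identical fermions gives zero
number_f = np.diag(c_dag @ c)               # allowed occupations 0 or 1 only

print("diag [a, a+]       :", np.diag(commutator))
print("boson occupations  :", number_b)
print("{c, c+} =\n", anticommutator)
print("(c+)^2 =\n", double_creation)
print("fermion occupations:", number_f)
```

Bosonic occupation numbers run 0, 1, 2, ... (Bose-Einstein statistics), whereas the fermionic creation operator squares to zero, so no single state can hold two electrons: this is the Pauli exclusion principle underlying Fermi-Dirac statistics.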

Following on from all this endeavor, Heisenberg and Pauli developed a general method for quantizing (Maxwell's) fields, using the Lagrangian description and canonical variables (the field potential and its conjugate momentum), but they encountered infinities when they tried to produce a complete picture of quantum electrodynamics (QED). The next major step was the independent work of Tomonaga and Schwinger, who described the interaction of an electron matter field with the electromagnetic field; but owing to effects such as vacuum polarization and the self-energy of the electron, it became necessary to absorb these infinities at each level of the calculation. Schwinger achieved this by employing Dirac's contact transformation to eliminate such virtual effects in the calculation.

A different approach was instigated by Feynman, who followed Dirac's philosophy in regarding electromagnetism as a quantized field but treated the electron as a quantum particle. In his path integral approach (which was also inspired by Dirac's work on the usefulness of the Lagrangian in QT), Feynman applied a relativistic cutoff field (equivalent to an auxiliary field) to cancel the infinite contributions due to the real particle of the original field. [The auxiliary masses of the cutoff are used more as a mathematical parameter, which finally tends to infinity, and are in principle unobservable.] Dirac, however, had strong reservations about any theory which neglects infinities, as opposed to the infinitesimals neglected in calculus. Nevertheless, renormalization later became a guiding principle in the formation of the electroweak theory. Finally, Dyson demonstrated the equivalence of Feynman's and Schwinger's methods and did much to show that renormalization was consistent to all orders. [Schwinger's method involved field operators, and the calculations were very hard to follow and had not been pursued beyond second order, whereas Feynman's method was more user-friendly.] Dyson managed to recast the Schwinger formulation so that it displayed all the advantages of the Feynman theory, but unlike the path integral method it used an electron-positron field and incorporated a term in the Hamiltonian describing the interaction of this matter field with the Maxwell field.

Although such approximation techniques are tedious, it would be desirable to use Feynman's method for the other three interactions (i.e. the weak and strong nuclear forces and gravity). However, unlike electromagnetism, where the strength with which charged particles couple to their field is small (~1/137), such perturbation techniques are more difficult with the strong interaction (quantum chromodynamics), and gravity has defied all attempts, since the calculations produce infinities that refuse to be renormalised. [Gluons interact with themselves as well as with their quarks, while gravitons, having energy and hence mass, also ‘gravitate’. Also, gravity is described as a curvature of space-time in general relativity (GR), hence a graviton implies a quantised space-time!!!] Interestingly, it is black holes which offer clues as to how to proceed with a theory of quantum gravity. The event horizon of such exotic entities combines ideas from GR, thermodynamics and QT! The area of the event horizon is proportional to the black hole's entropy (and the horizon also has a temperature), which has given rise to the development of what is known as the 'holographic principle'. This allows an explanation of what happens when the information (negentropy) stored in a (3-dimensional) assembly disappears into a black hole. Eventually, due to Hawking radiation, the material but not the information is restored to the universe at large, apparently in violation of the second law of thermodynamics. This dilemma is avoided if we assume that the information is stored in the 2-dimensional surface of the event horizon in the form of string segments (cf. 'strings' below). Each minute segment of a string, measuring about 10^-35 meters across, functions as a bit. Hence the surface of a black hole is able to store 3-D information and is therefore analogous to a hologram.
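To put a number on the 'bits on the horizon' picture, the sketch below uses the standard Bekenstein-Hawking formula S/k_B = A/(4 l_P²), with l_P² = Għ/c³, for a non-rotating black hole whose horizon radius is r_s = 2GM/c². This is the usual area-law estimate rather than a string calculation, the constants are rounded values, and one solar mass is used purely as an illustrative input.

```python
import math

# Rounded physical constants (SI units).
G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.0546e-34    # reduced Planck constant, J s
C = 2.998e8          # speed of light, m/s
M_SUN = 1.989e30     # solar mass, kg (illustrative input)


def horizon_bits(mass_kg):
    """Bekenstein-Hawking entropy of a Schwarzschild black hole, expressed in bits."""
    r_s = 2 * G * mass_kg / C**2              # Schwarzschild (event-horizon) radius
    area = 4 * math.pi * r_s**2               # horizon area
    planck_area = G * HBAR / C**3             # l_P^2, about (1.6e-35 m)^2
    entropy_nats = area / (4 * planck_area)   # S / k_B
    return r_s, entropy_nats / math.log(2)    # convert nats to bits


r_s, bits = horizon_bits(M_SUN)
print(f"horizon radius ~ {r_s / 1e3:.2f} km, information capacity ~ {bits:.2e} bits")
```

For one solar mass the horizon radius is about 3 km and the capacity comes out of order 10^77 bits. Doubling the mass quadruples the area, and hence the number of bits, rather than multiplying it by eight as a volume would: the information scales like a surface, as in a hologram.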

On another front, it would also be desirable to collect all the particles into a multiplet that becomes unified under one interaction, so that the laws of physics make no distinction between an electron, a neutrino or a quark. Returning to the Lagrangian, certain local gauge (internal) symmetries acting upon such a multiple-particle state imply the conservation of quantum properties but require the introduction of so-called gauge** fields, in order to ensure the invariance of the Lagrangian under such (local) transformations. [This involves Noether's theorem, in which symmetries are related to conservation laws; in particular, local gauge symmetries demand the addition of a gauge field in order that certain quantum values are conserved. It is this gauge field that is responsible for the observed fundamental interaction between the 'particles'.] The interactions can therefore be viewed on a quantum level as gauge (Yang-Mills) fields that must be introduced in order to ensure that the Lagrangian of (unified) particle states is symmetric under localized ‘internal’ transformations. [The quanta of these gauge fields are the photon, the gluons and the W and Z particles of the electromagnetic, strong and weak interactions respectively, which are adequately explained by the 'standard model'.] [Technicolour is a provisional theory which introduces a new interaction analogous to the colour force that binds quarks, and attempts to go beyond the standard model, but requires new generations of particles.] These interactions are mathematically described by specific groups of transformations. Whereas electromagnetism and the weak nuclear interaction are successfully unified under the gauge group SU(2)×U(1), the strong nuclear force (quantum chromodynamics, QCD) is described by an SU(3) symmetry. We therefore need to find a master symmetry group which subsumes these smaller gauge groups. Regarding such superunification, it is not possible to satisfactorily incorporate gravity (which is governed by the non-compact Poincaré group) in what is known as a unitary representation (which dictates the other three quantum interactions), unless one resorts to supernumbers, which combine both fermions and bosons via supersymmetry. [Specifically, there is a no-go theorem which states that a group that nontrivially combines both the Lorentz group and a compact Lie group cannot have a finite-dimensional unitary representation.] This concept was originally invoked in the early study of string theory, but although string theory has received a recent surge in popularity, supersymmetry itself does not require a string formulation. [The string Lagrangian is associated with the surface (world-sheet) swept out by a string as it moves through time, rather than with the curve (world-line) swept out by a point-like particle.]
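The link between a gauge group and the number of force-carrying quanta can be made concrete: each independent generator of the group needs its own gauge field. The sketch below counts generators (n² − 1 for SU(n), 1 for U(1)) and checks, using half the Pauli matrices as SU(2) generators, that non-abelian generators fail to commute, which is the algebraic reason gluons interact with themselves. SU(5) appears only as the usual textbook example of a simplest GUT group, included for comparison.

```python
import numpy as np


def generators(group):
    """Number of generators (= gauge bosons) of 'U(1)' or 'SU(n)'."""
    if group == "U(1)":
        return 1
    n = int(group[3:-1])          # parse n from "SU(n)"
    return n * n - 1


def count(groups):
    """Gauge bosons for a product group such as SU(3) x SU(2) x U(1)."""
    return sum(generators(g) for g in groups)


print("electroweak SU(2)xU(1):", count(["SU(2)", "U(1)"]))   # W+, W-, Z, photon
print("QCD SU(3):", count(["SU(3)"]))                          # the gluons
print("standard model:", count(["SU(3)", "SU(2)", "U(1)"]))
print("SU(5) GUT:", count(["SU(5)"]))

# SU(2) generators T_i = sigma_i / 2 (Pauli matrices) do not commute: [T1, T2] = i T3.
s1 = np.array([[0, 1], [1, 0]], dtype=complex) / 2
s2 = np.array([[0, -1j], [1j, 0]], dtype=complex) / 2
s3 = np.array([[1, 0], [0, -1]], dtype=complex) / 2
print("[T1, T2] == i T3:", np.allclose(s1 @ s2 - s2 @ s1, 1j * s3))
```

The abelian U(1) of electromagnetism has a single, trivially commuting generator, so the photon carries no charge of its own; in SU(2) and SU(3) the generators fail to commute, and the corresponding gauge quanta couple to one another.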

Indeed, even without quantum theory, gravity can be regarded in terms of a local symmetry applied to special relativity, and this puts gravity on an equal footing with acceleration (where velocity, and therefore the space-time frame of an observer, varies locally with its 4-dimensional ‘position’). We can therefore apply the classical laws of physics to an accelerating frame of reference if we invoke a gravitational field; alternatively, a gravitational field can be eliminated locally by adopting an accelerated frame of reference, together with the correct metric (curvature) of space-time that goes with it. A gravitational field therefore needs to be invoked in order to allow the global space-time transformations of special relativity to be locally symmetric, thereby extending special relativity to general relativity. [In this respect gravity is said to be locally equivalent to acceleration.]

Likewise, the unified electroweak field needs to be invoked if we are to allow a local gauge transformation to act symmetrically on a family of leptons or quarks. However, whereas Feynman’s path integral technique is applicable to these interacting particles, when considering gravity it is not possible to use such accurate quantum techniques, since the calculations blow up into infinities that refuse to be renormalised. It is therefore hoped that by extending unification to include all four interactions, some of these infinities will cancel out without the need for messy renormalisation tricks.

So from the simple description of nature in terms of classical particles and fields, we have been forced to accept their description in terms of quantum theory. Group symmetry gives us a way of relating a collection of these particles to the corresponding fields through which they interact with one another. Whereas Newton's gravitational law united falling bodies on Earth with celestial motion, Einstein unified space, time and gravity, while Maxwell succeeded in unifying magnetism with electricity. Gauge symmetry provides a way of combining electromagnetism and the weak interaction, and of extending this description so as to include the strong interaction (the so-called standard model). However, this effort has not achieved the status of a complete unification, since we have not yet discovered a single group that encompasses all three interactions (i.e. a Grand Unified Theory). Supersymmetry hopes to put the quanta of both ‘particle’ and ‘field’ (i.e. fermions and bosons) on an equal footing, while the latest development, string theory, hopes to explain which symmetries are allowed, which in turn determine the conservation laws of physics. [Whereas bosonic fields behave in a conventional commuting manner, the fermionic field is a spinor representation of the Lorentz group and is consequently anticommuting!] As an extra bonus, putting fermions and bosons in the same super-multiplet necessitates the introduction of a gravitational field (i.e. local supersymmetric transformations invoke a field that produces the localised space-time translations that are indicative of gravity). The price that we have to pay for such a ‘simplification’ of physical phenomena into a unified framework is, ironically, a succession of layers of abstract concepts, together with their relevant mathematical structures. Hence from tangible atoms we move by one level of abstraction to invisible fields and particles. A second level of abstraction takes us from fields and particles to the (gauge) symmetry groups by which fields and particles are related. The third level of abstraction is the interpretation of (super)symmetry groups in terms of states in a higher (10-dimensional) space-time, since it is the manner in which the space is compactified which determines the symmetries that are permitted. The fourth level is the world of superstrings, by whose dynamic behavior the states are defined. Finally we arrive at M-theory, in which even the strings are regarded as just one of many possible p-branes that can exist in an 11-dimensional space-time. M-theory consequently contains many varied universes (a multiverse), of which our own particular universe permits such rich laws of physics that it allows our very existence, so that we can perceive it!

* * * * * * * * * * * * * * * * * * * * * *

Part II Outward Bound ----- Cosmology

Quantum theory has been used to apply a wavefunction to the universe as a whole (viz. the Wheeler-DeWitt equation), even though we lack a quantum theory of gravity. Such quantum cosmology has been used by Hawking et al. to help explain the ripples in the big bang radiation detected by COBE, and possibly to explain how quantum wavefunctions can undergo decoherence to produce a classically observable universe.

In the absence of a quantum theory of gravity, provisional Grand Unified Theories (GUTs) have provided an understanding of why the universe is so smooth (homogeneous) and so close to the critical density that would eventually halt its expansion (referred to as the horizon and flatness problems respectively). In GUTs, the electroweak theory and the strong force (QCD) are unified into one interaction, and the underlying laws of physics make no distinction between an electron, a neutrino or a quark. [In math speak, the SU(3)×SU(2)×U(1) gauge symmetry of the 'standard model' is unified under one larger symmetry group.] This symmetry between these particles (and their interactions) only becomes broken into the separate interactions that we observe around us today by a mechanism which involves the so-called Grand Unified Higgs fields (different from the electroweak Higgs field). The spontaneous symmetry breaking (SSB) of the Higgs mechanism gives the particles their distinctive properties and their interactions different coupling strengths and ranges. In other words, the distinction between the strong, weak and electromagnetic interactions is caused by the way that their force-carrying particles (i.e. gluons, W, Z and photons) interact with the different Higgs particles.

The simplest GUT requires 24 Higgs fields, but the potential energy of 2 Higgs fields can be represented as a 3-dimensional graph, which resembles a sombrero! It has the unusual property that the zero value of the field has a (local) maximum energy density (the centre of the hat), while zero energy density is achieved at the rim, where the Higgs field is not at the origin (i.e. it is non-zero); it is this region which corresponds to the SSB condition. When this SSB occurred in the very early universe, the Higgs fields aligned themselves in a particular direction (analogous to a ball rolling down the sombrero to the brim in a spontaneously chosen direction), and the direction would be randomly chosen in different parts of the universe that are sufficiently far apart. Such a mismatch in directions would correspond to a large number of magnetic monopoles being formed, which, unlike electric monopoles (e.g. electrons), are not observed in our universe. [North and south poles occur together and are not observed separately, although Maxwell’s equations of electromagnetism would be more symmetric if we included such sources.]
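The sombrero can be written down explicitly. The sketch below assumes the standard quartic form V = −μ²|φ|² + λ|φ|⁴ for two real fields φ1 and φ2, shifted by a constant so that the rim sits at zero energy as described above; μ and λ are arbitrary illustrative values. It confirms that the centre is a local maximum, that every point on the rim (radius v = μ/√(2λ)) has the same zero energy, and then picks one direction at random, which plays the role of the 'spontaneous' choice.

```python
import numpy as np

MU, LAM = 1.0, 0.5   # illustrative parameters (assumptions)


def potential(phi1, phi2):
    """Mexican-hat potential for two real Higgs components, shifted to be zero on the rim."""
    r2 = phi1**2 + phi2**2
    return -MU**2 * r2 + LAM * r2**2 + MU**4 / (4 * LAM)


v = MU / np.sqrt(2 * LAM)                     # radius of the rim (vacuum value)
angles = np.linspace(0.0, 2 * np.pi, 12, endpoint=False)
rim = potential(v * np.cos(angles), v * np.sin(angles))

# Locate the minimum on a grid to confirm the rim radius numerically.
grid = np.linspace(-2.0, 2.0, 401)
P1, P2 = np.meshgrid(grid, grid)
V = potential(P1, P2)
i, j = np.unravel_index(np.argmin(V), V.shape)
r_min = np.hypot(P1[i, j], P2[i, j])

# Spontaneous symmetry breaking: the field settles at one randomly chosen angle.
theta = np.random.default_rng().uniform(0.0, 2 * np.pi)
vacuum = (v * np.cos(theta), v * np.sin(theta))

print("V at centre:", potential(0.0, 0.0))
print("V around rim:", np.round(rim, 12))
print("grid minimum at radius", round(float(r_min), 3), "expected", v)
print("chosen vacuum direction (radians):", theta, "->", vacuum)
```

All rim points are equally good vacua; regions that are too far apart to coordinate can settle at different angles, which is the mismatch that seeds monopoles.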

This is where inflation (cf. addendum below) comes into play, since it allows us to alter the graph in a way that produces a dip (or a flattened top) in the centre of the ‘sombrero’, which corresponds to a false vacuum. This false vacuum allows the alignment of the Higgs fields that are responsible for SSB to grow gradually, rather than producing a more chaotic distribution, which would result in the production of too many magnetic monopoles. As the name suggests, the false vacuum has peculiar properties, but the important point is that although it has a very high positive energy density, it also has a negative pressure of equal magnitude (p = −ρc²); since pressure enters the gravitational source term three times over (ρ + 3p/c²), the net effect corresponds to a repulsive force according to Einstein’s general theory of relativity. [Note that it is not the uniform negative pressure that drives the expansion, since only pressure differences result in forces, but rather that pressure, like mass, gives rise to gravitational forces, and negative pressure gives rise to a repulsive gravity that is associated with a cosmological constant.] Hence when we have an SSB of a Higgs field coupled to gravity we generate a constant term, which corresponds to an increase in the energy density of the vacuum that cannot be ignored when dealing with GR! Einstein originally introduced such a cosmological constant (lambda)** into his early model of the universe, which was then believed to be static, and hence the gravitational attraction of all the matter of the universe had to be balanced by a repulsive term. The introduction of such a term into the era when GUTs prevail does, however, produce an exponentially inflating universe (the de Sitter solution), rather than the one we observe today, which is typical of an initial explosion of matter experiencing the decelerating effects of gravity. Incidentally, the cosmological constant in the universe today is known to be close to zero, although quantum calculations involving GUTs produce an embarrassingly large value for lambda.
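The role of negative pressure can be seen from the acceleration equation of GR cosmology, ä/a = −(4πG/3)(ρ + 3p/c²). Writing the pressure as p = wρc² (w is the conventional equation-of-state parameter, a symbol not used elsewhere in this article), the sign of the source term ρ(1 + 3w) decides whether the expansion slows down or speeds up. The sketch below simply evaluates that sign for a few familiar cases, with a unit energy density as an illustrative input.

```python
from fractions import Fraction

# Equation-of-state parameters w (p = w * rho * c^2) for some standard cases.
CASES = {
    "matter (dust)": Fraction(0),
    "radiation": Fraction(1, 3),
    "false vacuum / cosmological constant": Fraction(-1),
}


def source(rho, w):
    """Effective gravitating density rho + 3p/c^2 = rho * (1 + 3w)."""
    return rho * (1 + 3 * w)


def accelerates(w):
    """Expansion accelerates when the effective source is negative, i.e. w < -1/3."""
    return source(1, w) < 0


for name, w in CASES.items():
    verdict = "accelerates" if accelerates(w) else "decelerates"
    print(f"{name:38s} w = {str(w):>4}: rho + 3p/c^2 = {source(1, w)} x rho -> {verdict}")
```

For the false vacuum the pressure term (−3ρ) outweighs the energy density (+ρ), leaving a net source of −2ρ; that is why a positive energy density with an equal and opposite pressure produces repulsion. The dividing line is w = −1/3.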

Recent observations of supernovae in distant galaxy clusters indicate that lambda has a small positive value, which consequently produces a repulsive '5th force' whose effects only become significant over large volumes of space. If these observations are indeed valid, they show that the universe is undergoing acceleration and will never halt its expansion. The good news is that this explains the flatness of the universe without needing to invoke such a large amount of 'missing mass': the larger the cosmological constant, the smaller the amount of positive mass/energy that is needed to produce a flat but not closed universe (Omega = 1). Some modern notions have tried to do away with the somewhat ad hoc inflationary theory by allowing the value of lambda to decrease with time. The early universe thus underwent rapid expansion due to the large initial value of lambda, which is not itself stable but decreases with time. This theory allows for fine tuning: as the universe expands and lambda decreases, matter is created in just the right amounts to keep the density of the universe at its flat value.
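The trade-off between lambda and 'missing mass' is simple bookkeeping in the standard Friedmann model: for a flat universe the density parameters of matter and of the cosmological constant must sum to one, Ω_m + Ω_Λ = 1 (radiation neglected), and the present-day deceleration parameter is q₀ = Ω_m/2 − Ω_Λ, where a negative value means acceleration. The Ω_Λ values in the sketch are illustrative inputs, not measurements.

```python
def matter_needed(omega_lambda):
    """Matter density parameter required for a flat (Omega = 1) universe."""
    return 1.0 - omega_lambda


def deceleration(omega_m, omega_lambda):
    """Present-day deceleration parameter q0 = Omega_m / 2 - Omega_Lambda."""
    return omega_m / 2 - omega_lambda


for omega_lambda in (0.0, 0.3, 0.5, 0.7):   # illustrative values
    omega_m = matter_needed(omega_lambda)
    q0 = deceleration(omega_m, omega_lambda)
    state = "accelerating" if q0 < 0 else "decelerating"
    print(f"Omega_Lambda={omega_lambda:.1f}: Omega_m={omega_m:.2f}, q0={q0:+.2f} ({state})")
```

In a flat universe q₀ = (1 − 3Ω_Λ)/2, so once Ω_Λ exceeds 1/3 the universe both needs less matter to stay flat and accelerates.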

The problem with this theory is that it requires the universal speed of light to be coupled to lambda and to decrease with time!! Such an iconoclastic notion as the speed of light being reduced as the universe expands will require a good deal of empirical evidence, which is not easy to produce (cf.** below). An alternative approach is to introduce another quantum field called quintessence, a basic (more adaptive) property of 'empty' space that may be able to account for the 5th force of repulsion.

So historically, inflation was used to explain the dearth of magnetic monopoles. However, by incorporating such a brief but rapid expansion in the early universe, we can also allow our observable universe to have been causally connected in the past, and these two effects explain the flatness and horizon problems respectively. The false vacuum allows the Higgs fields to grow in alignment without too much chaos, thereby avoiding the creation of too many magnetic monopoles, before it quickly decays into the true vacuum (via quantum tunnelling) that we observe today. Unfortunately, calculations involving the growth and percolation of these false vacuum regions have encountered difficulties, and several refined variations of inflation theory have had to be introduced. In addition, if we resort to string theory, it needs to be embedded in 26 dimensions (10 for superstrings) that became compactified during the early SSB period, when the false vacuum collapsed (as if things aren’t complicated enough).

It therefore becomes necessary to study the actual space in which the field equations exist. Whereas the mathematics of group theory was required by the physicists of the 60’s, topology has also become necessary for those studying how higher-dimensional universes become curled up to produce our observed 4 dimensions of space-time. What are known as the cohomology properties of these compactified spaces are related to the number of generations (viz. 3) of quarks and leptons that can occur, as well as to the symmetries that are permitted (the number of generations equals half the absolute value of the Euler characteristic of the manifold). This prompts a small digression, in that Penrose employs a sheaf version of cohomology in his twistor** theory. His philosophy is that both quantum theory and general relativity need to be replaced by a single and more fundamental theory, one which would replace the space-time description of physics with that of a space of complex numbers associated with spinor mathematics: projective twistor space. Spacetime and twistor space are related by a correspondence that represents light rays in spacetime as points in twistor space. A point in spacetime is then represented by the set of light rays that pass through it (each having a 'null direction'). Thus a point in spacetime becomes a Riemann sphere (a well-known method of stereographically representing the complex plane) in twistor space, and the effect of Lorentz transformations is to produce congruences that twist (hence the name). In this way we consider spacetime to be a secondary concept and regard twistor space (initially the space of light rays) as the more fundamental space.
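The Riemann-sphere picture can be sketched numerically. Below, a complex number ζ (in the usual spinor description, the ratio of two spinor components labelling a null direction) is mapped stereographically onto the unit sphere of directions, and the corresponding light ray (1, x, y, z), in units with c = 1, is checked to be null. The conventions (projection from the north pole, signature +, −, −, −) are assumptions chosen for illustration; twistor texts differ in such details.

```python
import numpy as np


def to_sphere(zeta):
    """Inverse stereographic projection of a complex number onto the unit sphere."""
    r2 = abs(zeta) ** 2
    return np.array([2 * zeta.real, 2 * zeta.imag, r2 - 1.0]) / (1.0 + r2)


def to_plane(point):
    """Stereographic projection of a sphere point (not the north pole) back to C."""
    x, y, z = point
    return complex(x, y) / (1.0 - z)


def light_ray(zeta):
    """Null four-vector (t, x, y, z) pointing along the direction labelled by zeta."""
    return np.concatenate(([1.0], to_sphere(zeta)))


def minkowski_norm(k):
    """k.k with signature (+, -, -, -); zero for a light ray."""
    return k[0] ** 2 - np.dot(k[1:], k[1:])


for zeta in (0j, 1 + 0j, -2 + 0.5j, 3j):
    p = to_sphere(zeta)
    print(zeta, "->", np.round(p, 4),
          "| back:", to_plane(p),
          "| k.k =", round(float(minkowski_norm(light_ray(zeta))), 12))
```

The limit ζ → ∞ corresponds to the north pole, completing the sphere, so every direction of a light ray through the spacetime point corresponds to exactly one point of this Riemann sphere.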

The actual twistor coordinate is constructed out of a pair of two-spinors which obey certain relationships to each other that are dictated by the corresponding spacetime coordinate (the spinors satisfy a specific twistor differential equation). Now if we think of light rays as photon histories, we also need to take into account their energy and their helicity, which can be right-handed, left-handed or zero. The multiplication of a twistor by its conjugate yields twice the value of its helicity or twist, which can therefore be positive, negative or zero. [This then leads to the formation of projective twistor space, in which the full twistor space is projected into a space that is divided into three regions according to whether the helicity is positive, negative or zero.] Twistor theory is a conformal (i.e. scale-invariant) theory, in which physical laws that are usually written in the framework of space-time are expressed in what may turn out to be a more fundamental and revealing manifold, which could therefore yield a deeper understanding of physics.

A massless field is then defined by a contour integral in twistor space, and these integrals are determined once we know about the singularities of a general twistor function in twistor space (in the case of electric or magnetic fields these singularities look like charges or sources where field lines begin or end). In other words, the differential equations that describe fields in space-time have been reduced to simple functions in the geometry of complex spaces, viz. projective twistor space. The field is given by the contour integral around this function, which in turn is determined by the nature of the singularity of this function (hence the use of sheaf cohomology in dealing with the analysis of these regions). For massless fields, it turns out that the helicity H of the quantum particle is related to the homogeneity T of the twistor function by the simple relationship $H = \frac{\hbar}{2}(-2 - T)$, so if we have a photon of helicity +1 it is necessary to write down a twistor function of homogeneity -4, while for a helicity -1 photon the homogeneity must be 0 (for an H = +2 graviton, T = -6). We therefore have a fundamental distinction between left- and right-handed light, i.e. chiral asymmetry is a fundamental property of twistor theory!
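The helicity rule above is easy to tabulate. Measuring helicity in units of ħ, H = (−2 − T)/2 inverts to T = −2H − 2; the sketch below uses exact fractions so that half-integer helicities come out cleanly. It only reproduces the bookkeeping stated in the text and does not construct any twistor functions.

```python
from fractions import Fraction


def homogeneity(helicity):
    """Twistor-function homogeneity T for helicity H (in units of hbar): T = -2H - 2."""
    return -2 * Fraction(helicity) - 2


def helicity(homog):
    """Inverse relation H = (-2 - T) / 2, in units of hbar."""
    return Fraction(-2 - homog, 2)


PARTICLES = [
    ("graviton, H = -2", Fraction(-2)),
    ("photon, H = -1", Fraction(-1)),
    ("spin-1/2, H = -1/2", Fraction(-1, 2)),
    ("scalar, H = 0", Fraction(0)),
    ("spin-1/2, H = +1/2", Fraction(1, 2)),
    ("photon, H = +1", Fraction(1)),
    ("graviton, H = +2", Fraction(2)),
]

for label, h in PARTICLES:
    t = homogeneity(h)
    assert helicity(t) == h          # the two relations are mutually inverse
    print(f"{label:20s} -> homogeneity T = {t}")
```

Opposite helicities land on different homogeneities (0 versus −4 for the two photon states), which is the chiral asymmetry referred to above.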

Producing a quantum theory of gravity is particularly difficult for several reasons. Firstly, quantum gravity implies quantised space-time!!! Secondly, gravity is intrinsically so weak that (at the time of writing) it had not yet been possible to detect classical gravitational waves directly, let alone the graviton (it is also difficult to combine the two realms, since QT deals with very small-scale phenomena, while GR deals with large masses). Thirdly, quantum field theories are written in terms of spinor fields, to which the Riemannian techniques of GR are not applicable. Finally, regarding unification, the GR group structure is non-compact, while the QT group is unitary, and there is a theorem which states that a non-compact (simple) group has no non-trivial finite-dimensional unitary representation (this is why we have to resort to supernumbers). At first glance GR and QT look very different mathematically, as one deals with space-time and direct observables while the other deals with Hilbert space and operators.

One approach to synthesizing the two and providing a quantum theory of gravity involves Topological Quantum Field Theories (TQFT). [A topologist is sometimes defined as a mathematician who cannot tell the difference between a tea cup and a doughnut, since they are diffeomorphic to each other, both having a genus of 1.] Quantum states are assigned to spaces of a given topology, and cobordism (a manifold interpolating between two such spaces) describes how quantum (gravity) states evolve, i.e. TQFT maps structures in differential topology to corresponding structures in quantum theory. The state of the universe can only change when the topology of space itself changes, and TQFT therefore does not presume a given fixed topology for space-time. Quantum operators are thus related to cobordisms, and n-category theory (roughly, an algebra of n dimensions) is a useful advance in understanding the cobordism theory of TQFT.
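The idea that amplitudes depend only on topology can be seen in the simplest possible TQFT, far removed from gravity. A minimal sketch, assuming a 2-dimensional "finite gauge group" TQFT (the untwisted Dijkgraaf-Witten model) with gauge group S3, chosen purely for illustration: its partition function on a closed surface of genus g is the number of flat S3 connections, i.e. homomorphisms from the surface's fundamental group to S3, divided by |S3| = 6. The brute-force count is compared with the closed form, a sum of (|G|/dim ρ)^(2g-2) over irreducible representations ρ (of dimensions 1, 1 and 2 for S3).

```python
from fractions import Fraction
from itertools import permutations, product

# S3 as permutation tuples: p[i] is the image of i.
G = list(permutations(range(3)))
IDENTITY = (0, 1, 2)
IRREP_DIMS = (1, 1, 2)   # dimensions of the irreducible representations of S3

def compose(p, q):
    """(p o q)(i) = p(q(i))."""
    return tuple(p[q[i]] for i in range(3))

def inverse(p):
    inv = [0] * 3
    for i, pi in enumerate(p):
        inv[pi] = i
    return tuple(inv)

def commutator(a, b):
    """[a, b] = a b a^-1 b^-1."""
    return compose(compose(a, b), compose(inverse(a), inverse(b)))

def partition_function(genus):
    """Z(Sigma_g) = |Hom(pi_1(Sigma_g), G)| / |G|. The fundamental group has
    generators a_1, b_1, ..., a_g, b_g and the single relation
    [a_1, b_1] ... [a_g, b_g] = 1, so count the 2g-tuples satisfying it."""
    count = 0
    for elems in product(G, repeat=2 * genus):
        word = IDENTITY
        for k in range(genus):
            word = compose(word, commutator(elems[2 * k], elems[2 * k + 1]))
        if word == IDENTITY:
            count += 1
    return Fraction(count, len(G))

def closed_form(genus):
    """Sum over irreps rho of (|G| / dim rho)^(2g - 2)."""
    return sum(Fraction(len(G), d) ** (2 * genus - 2) for d in IRREP_DIMS)

for g in range(3):
    print(f"genus {g}: Z = {partition_function(g)} (closed form {closed_form(g)})")
```

Z takes the values 1/6, 3 and 81 for genus 0, 1 and 2: it changes only when the topology (the genus) changes, which is the sense in which such a theory has no local degrees of freedom.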

There have been two reactions to the apparent inconsistency of quantum

theories with the necessary background-independence of general relativity.

The first is that the geometric interpretation of GR is not fundamental,

but just an emergent quality of some background-dependent theory. The

opposing view is that background-independence is fundamental, and quantum mechanics needs to be generalized to settings where there is no a priori specified space-time. This geometric point of view is the one expounded in TQFT. In recent years, progress has been rapid on both fronts, leading ultimately to String Theory (which is not background independent) and Loop Quantum Gravity (LQG), which is background independent and also incorporates the diffeomorphism invariance of GR. Topological quantum field theory provided an example of a background-independent quantum theory, but with no local degrees of freedom and only finitely many degrees of freedom globally. This is inadequate to describe gravity, since even in the vacuum the metric has local degrees of freedom according to general relativity (e.g. those due to the propagation of gravitational waves in empty space).

Loop Quantum Gravity is a nonperturbative quantization of 3-space geometry, with quantized area and volume operators. In LQG, the fabric of space-time is a foamy network of interacting loops mathematically described by spin networks (an evolving spin network is termed a spin foam; spin foams are to operators what spin networks are to states/bases). These loops are about 10^-35 meters in size, the so-called Planck scale. In earlier lattice theories the field is represented by quantised tubes/strings of flux which exist only on the edges of the lattice, and the field strength is given by integrating around a closed loop. In LQG, space and time are relational! As in GR, where there are many ways of slicing a section of space-time, there are many ways of slicing an evolving spin network; thus there are no things, only processes! [A spin network is a graph with edges labeled by representations of some group and vertices labeled by intertwining operators. Thanks in part to the introduction of spin network techniques, we now have a mathematically rigorous and intuitively compelling picture of the kinematical aspects of loop quantum gravity.] The loops knot together forming edges, surfaces, and vertices, much as soap bubbles do when joined together. In other words, space-time itself is quantized. Any attempt to divide a loop would, if successful, cause it to divide into two loops, each with the original size. In LQG, spin networks represent the quantum states of the geometry of relational space-time. Looked at another way, Einstein's theory of general relativity is a classical approximation of a quantized geometry.
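The figure of 10^-35 m is the Planck length, the natural length that can be built from ħ, G and c; its square and cube set the scale of the area and volume quanta discussed below. A quick check using the CODATA 2018 values of the constants:

```python
import math

# CODATA 2018 values, SI units
HBAR = 1.054571817e-34   # reduced Planck constant, J s
G = 6.67430e-11          # Newtonian constant of gravitation, m^3 kg^-1 s^-2
C = 2.99792458e8         # speed of light in vacuum, m/s

planck_length = math.sqrt(HBAR * G / C**3)   # l_P = sqrt(hbar G / c^3)
planck_area = planck_length**2               # natural unit for LQG area eigenvalues
planck_volume = planck_length**3             # natural unit for LQG volume eigenvalues

print(f"l_P   = {planck_length:.4e} m")      # about 1.616e-35 m
print(f"l_P^2 = {planck_area:.4e} m^2")
print(f"l_P^3 = {planck_volume:.4e} m^3")
```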

Historically, Regge calculus was one of the first lattice approaches to gravity: it divides space into small, flat 3D tetrahedral simplexes, with the curvature concentrated on the edges where they meet, and it was later used in attempts to quantize gravity. From the Hilbert space of such quantum tetrahedra, it was intended to produce 'Feynman propagators' for gravity and to recover Einstein's field equations in the macroscopic domain of space-time. Each edge is assigned a spin j and, in one type of approach (the Ponzano-Regge model), the exponential of the action for such a configuration is a suitable product of 6j symbols, one for each 3-simplex, built from the spins on its 6 edges; a partition function is then obtained by summing these products over all possible assignments of spins to the edges. Remember that angular momentum is a quantum (bi)vector and is therefore subject to Heisenberg's Uncertainty Principle. [In considering a 4-dimensional Lorentzian spin network (as opposed to a 3-D one), we employ a 4-simplex, which has 5 vertices, 10 edges, 10 triangles and 5 tetrahedra, and the 6j symbols are replaced by 10j symbols.]
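In what is usually called the Ponzano-Regge model, each tetrahedron contributes a Wigner 6j symbol whose six entries are the spins on its six edges (the full amplitude also includes signs and (2j+1) factors, omitted here). A sketch of the 6j symbol itself via the standard Racah formula, using exact rational arithmetic for the alternating sum and floating-point square roots for the triangle coefficients; the function names are illustrative.

```python
from fractions import Fraction
from math import factorial, sqrt

def _admissible(a, b, c):
    """True if spins a, b, c can couple: triangle inequalities hold and a+b+c is an integer."""
    return ((a + b + c).denominator == 1
            and a <= b + c and b <= a + c and c <= a + b)

def _delta(a, b, c):
    """Triangle coefficient Delta(a, b, c) appearing in the Racah formula."""
    num = (factorial(int(a + b - c)) * factorial(int(a - b + c))
           * factorial(int(-a + b + c)))
    return sqrt(num / factorial(int(a + b + c + 1)))

def wigner_6j(j1, j2, j3, j4, j5, j6):
    """Wigner 6j symbol {j1 j2 j3; j4 j5 j6}. Spins may be ints, floats such as 0.5,
    or Fractions; returns 0.0 if any of the four triads is not admissible."""
    j1, j2, j3, j4, j5, j6 = (Fraction(j) for j in (j1, j2, j3, j4, j5, j6))
    triads = [(j1, j2, j3), (j1, j5, j6), (j4, j2, j6), (j4, j5, j3)]
    if not all(_admissible(*tri) for tri in triads):
        return 0.0
    a = [int(sum(tri)) for tri in triads]
    b = [int(j1 + j2 + j4 + j5), int(j2 + j3 + j5 + j6), int(j3 + j1 + j6 + j4)]
    series = Fraction(0)
    for t in range(max(a), min(b) + 1):
        denom = 1
        for ai in a:
            denom *= factorial(t - ai)
        for bi in b:
            denom *= factorial(bi - t)
        series += (-1) ** t * Fraction(factorial(t + 1), denom)
    prefactor = 1.0
    for tri in triads:
        prefactor *= _delta(*tri)
    return prefactor * float(series)

print(wigner_6j(1, 1, 1, 0, 1, 1))
print(wigner_6j(0.5, 0.5, 1, 0, 1, 0.5))
```

Both printed values can be checked against the special case {j1 j2 j3; 0 j3 j2} = (-1)^(j1+j2+j3) / sqrt((2j2+1)(2j3+1)), which gives -1/3 and 1/sqrt(6) respectively.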

Next consider Wilson loops, which have been used to analyze fields in QCD by applying a lattice structure and integrating along closed paths. They are functions on the space of connections; on a lattice, the Wilson loop is just the trace of the holonomy around a closed loop of links, taken in some representation of the gauge group. [This philosophy originated from considering the vacuum as being like the discrete lines of (magnetic) flux exhibited by superconductors.] A 'connection' on the lattice is simply an assignment of an element of the gauge group to each edge of the graph, representing the effect of parallel transport along the edge. [The holonomy of such a gauge field around a closed loop is a measure of the field strength, which in turn determines the value of the Feynman path integral.] Each edge of the lattice is thus assigned a gauge group element that represents the (holonomic) connection, and each vertex is assigned a group element that represents a gauge transformation. From this a quotient space is formed (i.e. the space of connections modulo gauge transformations), and by mapping these onto suitable (complex) irreducible spin representations, we obtain a natural way of producing a Spin Network. [Such spin network edges represent quantized flux lines of the field.] Holonomy is a natural variable in a Yang-Mills gauge theory, in which the relevant variables do not refer to what happens at a point but rather to the relation between different points connected by a line (curve). Hence, to create a framework for quantum GR, we introduce a connection A and its conjugate momentum E, from which we can produce a spin network together with area and volume operators, which act upon space so as to quantise it. [The flux through a surface is represented by area operators for a spin network, acting on a surface described by a spin network basis.] In LQG, holonomy becomes a quantum operator that creates loop states. Over a continuous background, Wilson loop states are far too numerous (and too singular) to provide a basis for the Hilbert space of a QFT. However, loop states are neither too singular nor too numerous when formulated in a background-independent theory, where space-time is itself formed by loop states, since the position of these states is defined only relative to other loops and not to a background. The size of the state space is therefore dramatically reduced by this diffeomorphism invariance (a feature of GR itself).
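A sketch of the lattice construction just described, assuming the gauge group SU(2) in its spin-1/2 representation and a single square loop (plaquette) of four links; all helper names are hypothetical. It checks the key property: a gauge transformation at the vertices changes every link matrix, yet leaves the trace of the holonomy around the closed loop unchanged.

```python
import math
import random

def su2(a, b, c, d):
    """SU(2) matrix [[alpha, beta], [-conj(beta), conj(alpha)]] built from the
    quaternion a + bi + cj + dk after normalising it to unit length."""
    n = math.sqrt(a * a + b * b + c * c + d * d)
    alpha, beta = complex(a, b) / n, complex(c, d) / n
    return [[alpha, beta], [-beta.conjugate(), alpha.conjugate()]]

def random_su2(rng):
    """A random SU(2) element (a normalised Gaussian quaternion)."""
    return su2(*(rng.gauss(0.0, 1.0) for _ in range(4)))

def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def dagger(x):
    """Conjugate transpose, which is the inverse for a unitary matrix."""
    return [[x[j][i].conjugate() for j in range(2)] for i in range(2)]

def wilson_loop(links):
    """Trace of the holonomy (ordered product of link matrices) around a closed loop."""
    holonomy = [[1 + 0j, 0j], [0j, 1 + 0j]]
    for u in links:
        holonomy = matmul(holonomy, u)
    return holonomy[0][0] + holonomy[1][1]

def gauge_transform(links, g):
    """Link i runs from vertex i to vertex i+1 (mod n), so U_i -> g_i U_i g_{i+1}^dagger."""
    n = len(links)
    return [matmul(matmul(g[i], links[i]), dagger(g[(i + 1) % n])) for i in range(n)]

rng = random.Random(0)
plaquette = [random_su2(rng) for _ in range(4)]   # one square loop of four links
gauge = [random_su2(rng) for _ in range(4)]       # one group element per vertex
before = wilson_loop(plaquette)
after = wilson_loop(gauge_transform(plaquette, gauge))
print(before, after)   # equal up to rounding: the Wilson loop is gauge invariant
```

The invariance follows because the inserted factors cancel in pairs around the loop, leaving g_0 W g_0^dagger, whose trace equals that of W.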

A finite linear combination of loop states is precisely what is defined as a spin network state of a Yang-Mills theory. Spin networks are gauge invariant and, by taking suitable sums of tensor products, provide an orthonormal basis for LQG. Penrose had earlier introduced Spin Networks, in which the edges were labeled by irreducible representations of the SU(2) Lie group (each characterized only by its dimension d = 2j+1, where j is the spin quantum number) and the vertices by intertwining operators (tensors that transform incoming states into outgoing states), and it was found that such a combinatorial formalism was preferable since it produced a relational theory. Spin network edges are associated with a spin j; the edges piercing a surface determine its area, while the nodes within a given region determine its volume. A close correspondence exists between quantum tetrahedra and 4-valent vertices of SU(2) spin networks. The 4 faces of a tetrahedron are associated with 4 irreducible representations of SU(2), each represented by a line perpendicular to its face, and the 4 lines meet at a central node of the tetrahedron (there are actually bivectors associated with each face, in keeping with constraints of GR relating to the Ricci curvature tensor; quantizing the bivectors/tetrahedra amounts to labeling each face with a pair of spinors). We therefore obtain a 4-valent (colour coded) spin network, each line of which carries a quantum of area while each node carries a quantum of volume, and which can exhibit properties that are gauge invariant. The quantum bivectors allow us to construct area and volume operators which act upon the spin network basis to produce a discrete spectrum, in units of the Planck length squared and cubed respectively. In the case of the area operator, each edge of spin j piercing a surface contributes an eigenvalue proportional to sqrt(j(j+1)) (in the usual convention A = 8πγ l_P² Σ sqrt(j(j+1)), where γ is the Barbero-Immirzi parameter), and in keeping with quantum theory we would expect these to correspond to physical observables, i.e. we have a quantized space!
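The discreteness of the area spectrum is easy to see numerically. A sketch assuming the widely used convention A = 8πγ l_P² Σ sqrt(j(j+1)), summed over the spin-network edges piercing the surface; the value of the Barbero-Immirzi parameter γ is left open by working in units of 8πγ l_P².

```python
import math
from fractions import Fraction

def area_eigenvalue(spins):
    """Area eigenvalue, in units of 8*pi*gamma*l_P**2, of a surface pierced by
    spin-network edges carrying the given spins: the sum of sqrt(j(j+1))."""
    return sum(math.sqrt(j * (j + 1)) for j in spins)

# Single-edge eigenvalues for j = 1/2, 1, ..., 3: discrete and unevenly spaced.
for k in range(1, 7):
    j = Fraction(k, 2)
    print(f"j = {j}: A = {area_eigenvalue([j]):.4f}")

# The smallest non-zero eigenvalue (the "area gap") comes from a single j = 1/2 edge.
area_gap = area_eigenvalue([Fraction(1, 2)])
print(f"area gap = {area_gap:.4f}")   # sqrt(3)/2
```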

These spin networks (formed from the above-mentioned quotient gauge space) do not refer to a specific space background, and we can reproduce Wilson loop calculations to imitate a quantum theory of gravity which is relational, in the spirit of GR. So, since spin networks form a convenient basis of kinematical states, they have largely replaced collections of loops as our basic model for 'quantum 3-geometries'. Now, in order to better understand the dynamical aspects of quantum gravity, we would also like a model for 'quantum 4-geometries'. In other words, we want a truly quantum-mechanical description of the geometry of spacetime. Recently the notion of a 'spin foam' has emerged as an interesting candidate; whereas spin networks provide a language for describing the quantum geometry of space, a spin foam attempts to extend this language to describe the quantum geometry of space-time. A spin foam is a 2-dimensional cell complex with faces labeled by representations and edges labeled by intertwining operators; generically, any slice of a spin foam gives a spin network. We calculate the amplitude for any spin foam as a product of the face and edge amplitudes (which play the role of propagators) and the vertex amplitudes (which play the role of interaction amplitudes). [Abstractly, a Feynman diagram can be thought of as a graph with edges 'labelled' by a group representation and vertices labelled by intertwining operators. Feynman diagrams are 1D because they describe particle histories, while spin foams are 2D because in LQG the gravitational field is described not in terms of point particles but as 1D spin networks. Feynman diagrams compute probability amplitudes as products of edge and vertex amplitudes, whereas spin foams compute them as products of face, edge and vertex amplitudes. Like Feynman diagrams, spin foams serve as a basis for quantum histories.]
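The amplitude rule can be sketched generically. This is a toy illustration, not any specific spin foam model: the two-face complex and the edge and vertex amplitudes below are hypothetical placeholders, and only the face amplitude 2j+1 (the dimension of the spin-j representation, a common choice) is borrowed from standard models. Summing the amplitude over all labellings plays the role of the sum over quantum histories.

```python
from fractions import Fraction
from itertools import product
from math import prod

def foam_amplitude(spins, edges, vertices, face_amp, edge_amp, vertex_amp):
    """Amplitude of one labelled spin foam: product of face, edge and vertex amplitudes.
    spins:    dict face -> spin j labelling that face
    edges:    dict edge -> list of faces meeting along that edge
    vertices: dict vertex -> list of faces meeting at that vertex"""
    a_faces = prod(face_amp(j) for j in spins.values())
    a_edges = prod(edge_amp([spins[f] for f in fs]) for fs in edges.values())
    a_verts = prod(vertex_amp([spins[f] for f in fs]) for fs in vertices.values())
    return a_faces * a_edges * a_verts

def sum_over_histories(faces, edges, vertices, spin_values, **amps):
    """Sum the amplitudes of every way of labelling the faces with spins from spin_values."""
    total = 0
    for choice in product(spin_values, repeat=len(faces)):
        total += foam_amplitude(dict(zip(faces, choice)), edges, vertices, **amps)
    return total

# Hypothetical toy complex: two faces meeting along one edge and at one vertex.
faces = ["f1", "f2"]
edges = {"e1": ["f1", "f2"]}
vertices = {"v1": ["f1", "f2"]}
spin_values = [Fraction(k, 2) for k in range(3)]   # j = 0, 1/2, 1 (an arbitrary cut-off)

Z = sum_over_histories(
    faces, edges, vertices, spin_values,
    face_amp=lambda j: 2 * j + 1,                        # dimension of the spin-j representation
    edge_amp=lambda js: 1,                               # placeholder edge amplitude
    vertex_amp=lambda js: 1 if js[0] == js[1] else 0,    # placeholder: spins must match
)
print(Z)
```

With these placeholders only labellings with equal spins survive, so Z = 1 + 4 + 9 = 14; a realistic model would replace the placeholders with genuine edge and vertex amplitudes.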

Although LQG has been successful in predicting Hawking radiation and black hole entropy, it is restricted to the domain of quantum gravity and as yet offers no insight into the other fundamental interactions or the possibility of unification. Unlike string theory, however, it does offer potentially testable predictions, such as a variation of the speed of light at different energies. The spin foam which makes up the fabric of space-time predicts a refractive index that varies with the frequency, and hence the energy, of the photon. It is therefore hoped that by studying gamma ray bursts from the most remote regions of the universe, this small dispersion in the arrival times of the radiation can be observed (other avenues of research also point to theories involving a variable speed of light).
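A rough estimate of the size of the effect, assuming the simplest (linear) form of energy-dependent speed, v ≈ c(1 - E/E_QG), with the hypothetical quantum-gravity scale E_QG set equal to the Planck energy and the expansion of the universe ignored; the photon energy and source distance are illustrative round numbers.

```python
C = 2.99792458e8             # speed of light, m/s
PLANCK_ENERGY_GEV = 1.22e19  # Planck energy, GeV
GLY_IN_M = 9.461e24          # one billion light years, in metres

def arrival_delay(photon_energy_gev, distance_m, e_qg_gev=PLANCK_ENERGY_GEV):
    """Extra travel time (s) of a high-energy photon relative to a low-energy one,
    for a linear dispersion v = c (1 - E/E_QG), ignoring cosmic expansion."""
    return (photon_energy_gev / e_qg_gev) * distance_m / C

# A 10 GeV gamma ray from a source 10 billion light years away.
delay = arrival_delay(10.0, 10 * GLY_IN_M)
print(f"delay ~ {delay:.2f} s")
```

A delay of a fraction of a second accumulated over billions of years is why sources with sharp time structure, such as gamma ray bursts, are the natural place to look.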

Some researchers believe that even the success of string theory can be explained in terms of discrete units of space that become evident on the Planck scale (which, at ~10^-35 m, is much smaller than that of the compactified dimensions of superstring theory). Also, both theories allude to a version of the Holographic** principle (where a bulk theory with gravity in n dimensions is equivalent to a boundary theory in n-1 dimensions without gravity, cf. the Maldacena conjecture below), in which entities such as black holes contain all their information on their event horizons, roughly one bit for every 4 Planck areas. This arises since black holes emit Hawking radiation, so their mass is related to a thermodynamic temperature; hence the entropy of a black hole is proportional to its surface area, while information is negentropy.
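A worked number: the entropy of a solar-mass black hole, assuming a non-rotating (Schwarzschild) hole and the Bekenstein-Hawking value S = k A/(4 l_P²). Dividing by ln 2 converts entropy in units of k into bits, which is why "one bit per 4 Planck areas" is a rough rather than exact figure.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J s
G = 6.67430e-11          # m^3 kg^-1 s^-2
C = 2.99792458e8         # m/s
M_SUN = 1.989e30         # solar mass, kg (approximate)

def horizon_area(mass_kg):
    """Area of a Schwarzschild event horizon, A = 4 pi r_s^2 with r_s = 2GM/c^2."""
    r_s = 2 * G * mass_kg / C**2
    return 4 * math.pi * r_s**2

def entropy_over_k(mass_kg):
    """Bekenstein-Hawking entropy in units of Boltzmann's constant: A / (4 l_P^2)."""
    planck_area = HBAR * G / C**3
    return horizon_area(mass_kg) / (4 * planck_area)

s = entropy_over_k(M_SUN)
bits = s / math.log(2)   # entropy in units of k divided by ln 2 gives bits
print(f"S/k ~ {s:.2e}, about {bits:.2e} bits")
```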

However, LQG emphasizes the necessity of a relational theory in which space and time are dynamic rather than fixed, and the primary concept is that of processes by which information is conveyed from one part of the world to another. Hence the area of any surface in (LQG) space is nothing but the capacity of that surface as a channel of information, and so the world can be construed as a network of relationships. This avenue of approach has led to the study of topos theory (a relational logic) and of non-commutative geometry, in which it is impossible to determine enough information to locate a point (a point is then described by an appropriate matrix), but which can nevertheless support a description of particles and fields evolving in time.

Hawking, on the other hand, believes that the QT of supergravity is the way forward, although he does utilize an imaginary time coordinate. Time has long been a problem for philosophers and, more recently, physicists have started to take the view that our concept of time may be responsible for some of the intractable problems that face QT and GR (particularly relating to the collapse of the wavefunction, but also because a QT of gravity implies quantized space-time). Indeed J. Barbour believes that time does not exist but is merely a mental construct. He has a tentative theory which involves a stationary cosmological wave function (akin to that used in the Wheeler-DeWitt equation) acting upon a configuration space of the whole universe (known as Platonia). Einstein once said that space and time are modes by which we think rather than conditions in which we live, but, as Hume would say, how can something that exists as a series of states (the Nows) be aware of itself as a series? Of course, the cosmic wavefunction must also include the human mind, but I am still not convinced that this will be sufficient to completely justify Barbour’s claim of ‘The End of Time’ in physics.

Returning to string theory, five versions originally evolved, but more recently a ‘second revolution’ has subsumed these into an 11-dimensional formulation known as M-theory, which also accommodates supergravity! (M is sometimes said to stand for mystery, as very little is known about the underlying nature of this 21st Century theory.) As D. H. Lawrence once wrote, "trust the tale not the teller"; hence even if the models that are proposed appear incredible, the mathematics may hold real value.

To summarise, therefore, inflation theory is a good example of where studies in sub-atomic physics are relevant to those of cosmology. When we study the quantum mechanics of GUT and add on the effects of general relativity as an appendage, we find that it is necessary to include a non-zero value for the cosmological constant in the early universe, when the strong, weak and electromagnetic interactions were unified. This produces an exponentially inflating universe during this early phase transition, which overcomes the horizon and flatness problems. Its main raison d’être was, however, to explain the scarcity of magnetic monopoles. These would otherwise be more prevalent if there had been a more chaotic Higgs mechanism, rather than the inflationary version of the SSB that is responsible for the distinct interactions that we observe today. The richness of these physical laws is nowhere more evident than in the creation of our own planet Earth and, in particular, the evolution of the human mind, which allows contemplation of the very laws that are responsible for its existence.

Addendum

** In some sense complex numbers are more fundamental than real numbers. The extra mathematical structure gives rise to theorems like Cauchy's integral formula, which demonstrates an interplay between local and global (topological) properties of a manifold, and this has been exploited to great effect in twistor theory. [This could have relevance to the non-locality involved in the actual collapse of a wavefunction, or to the fact that the negative energy stored in a gravitational field is also non-local.] There are also local isomorphisms between space-time groups and groups associated with complex spaces (the latter, as in the case of spinor space, are simply connected, while the rotation group of Euclidean geometry is doubly connected). Indeed, quantum mechanics demands a complex space (that of spinors) in order for it to be compatible with SR, this being part and parcel of quantum field theory. Now a spin n/2 field, which is represented by a symmetric spinor with n indices, can be associated (in Projective Twistor space) with a twistor function f_{-n-2} of homogeneity -n-2 via a contour integral. One of the most natural ways in which complex analysis and contour integral techniques can be developed is through sheaf theory and sheaf cohomology, which take a fundamental role in twistor theory. More precisely, zero rest mass fields on space-time, which are originally described as real spinor fields satisfying field equations, can be expressed as arbitrary holomorphic functions of a twistor (with a contour), and these can then be further interpreted as elements of a (first) sheaf cohomology group. [Historically, it was the study of sheaf theory which originally gave rise to CATEGORY theory, in an attempt to determine which given sheaves are equivalent. For example, a presheaf of abelian groups over a topological space X is a contravariant functor from the category of open subsets of X to the category of abelian groups.]
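The local/global interplay in Cauchy's integral formula can be seen numerically: the value of a holomorphic function at an interior point is recovered purely from its values on a surrounding loop, while for a point outside the loop the same integral gives zero. A sketch, with the exponential function as an arbitrary choice of holomorphic f and a simple trapezoidal rule for the contour integral (the function name is illustrative).

```python
import cmath
import math

def cauchy_value(f, z0, center=0j, radius=1.0, n=2000):
    """Approximate (1 / 2 pi i) * contour integral of f(z) / (z - z0) dz around the
    circle |z - center| = radius, using the trapezoidal rule on n equally spaced points.
    This equals f(z0) if z0 is inside the circle and 0 if it is outside."""
    total = 0j
    for k in range(n):
        theta = 2 * math.pi * k / n
        z = center + radius * cmath.exp(1j * theta)
        dz = 1j * radius * cmath.exp(1j * theta) * (2 * math.pi / n)
        total += f(z) / (z - z0) * dz
    return total / (2j * math.pi)

z0 = 0.3 + 0.2j
print(cauchy_value(cmath.exp, z0), cmath.exp(z0))   # inside the loop: the two agree
print(abs(cauchy_value(cmath.exp, 2 + 0j)))          # outside the loop: essentially zero
```

The answer depends only on whether the loop winds around z0, not on the loop's detailed shape, which is the topological aspect mentioned above.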

**The cosmological constant Lambda acts like a (positive) vacuum energy density but is associated with a negative pressure because:

1. The vacuum energy density must be constant, because there is nothing for it to depend upon.

2. If a piston capping a cylinder of vacuum is pulled out, producing more vacuum, the vacuum within the cylinder has more energy, which must have been supplied by the force pulling on the piston.

3. If the vacuum is trying to pull the piston back into the cylinder, it must have a negative pressure, since a positive pressure would tend to push the piston out.
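Steps 1 to 3 amount to a short thermodynamic argument. Written out, assuming the first law for a slow adiabatic change, dE = -p dV, and a constant vacuum energy density ρ_vac (so the vacuum energy in a volume V is E = ρ_vac c² V):

```latex
\begin{aligned}
E &= \rho_{\mathrm{vac}}\, c^{2}\, V
  &&\Rightarrow\quad dE = \rho_{\mathrm{vac}}\, c^{2}\, dV
  \quad (\rho_{\mathrm{vac}} \text{ constant}),\\
dE &= -p\, dV && \text{(first law, no heat exchanged)},\\
\Rightarrow\quad p &= -\rho_{\mathrm{vac}}\, c^{2} < 0 .
\end{aligned}
```

Pulling the piston out (dV > 0) raises E, so the work done on the vacuum, -p dV, must be positive, which is only possible if p is negative.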

If we denote the ratio of the vacuum density to the critical density as Kappa, and the ratio of the actual matter density to the critical density as Omega, then the Universe is open if Omega + Kappa is less than unity, closed if it is greater than unity and flat if it is exactly one. Now if Kappa is greater than unity then the Universe will expand forever unless Omega is much larger than current observations suggest. For Kappa greater than zero, even a closed Universe can expand forever (e.g. if the density of matter is as small as we currently observe).
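A small sketch of this classification, with Omega and Kappa as defined in the text. It adds one standard result not stated above: for a universe of pressureless matter plus a cosmological constant (radiation neglected), the present deceleration parameter is q0 = Omega/2 - Kappa, and q0 < 0 means the expansion is currently accelerating. The function names are illustrative.

```python
def geometry(omega, kappa, tol=1e-9):
    """Spatial geometry from Omega (matter/critical) and Kappa (vacuum/critical)."""
    total = omega + kappa
    if abs(total - 1.0) <= tol:
        return "flat"
    return "open" if total < 1.0 else "closed"

def deceleration_parameter(omega, kappa):
    """q0 = Omega/2 - Kappa for matter plus a cosmological constant (radiation neglected)."""
    return omega / 2 - kappa

for omega, kappa in [(0.3, 0.7), (1.0, 0.0), (0.3, 0.0), (0.3, 0.8)]:
    q0 = deceleration_parameter(omega, kappa)
    state = "accelerating" if q0 < 0 else "decelerating"
    print(f"Omega={omega}, Kappa={kappa}: {geometry(omega, kappa)}, q0={q0:+.2f} ({state})")
```

The last case, Omega = 0.3 and Kappa = 0.8, is closed yet accelerating, illustrating how a positive Kappa lets even a closed Universe keep expanding.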

MORE DETAILS REGARDING INFLATION

The electroweak Higgs field is responsible for spontaneous symmetry breaking and gives mass to particles such as the W and Z bosons. It is also believed to give mass to the other elementary particles (the quarks and charged leptons), depending on how strongly it couples to each of them. A Grand Unified Higgs mechanism may also explain the nature of the rapid inflation experienced during the earliest epoch of the universe, since its initially high energy value can be associated with a negative pressure which drives the expansion. When the energy of the vacuum drops to zero (being converted into matter and radiation), the Higgs field takes on a non-zero value, which breaks the symmetry of the known interactions by giving mass to the particles and their force-carrying bosons. This inflation field solves the cosmological problems of flatness, monopole scarcity and the horizon problem, and was derived from considering Einstein's field equations together with GUT.

During this inflationary epoch, which lasted for only a billionth of a billionth of a billionth of a second, a region the size of a DNA molecule would have expanded to the size of the Milky Way galaxy, a greater expansion than has occurred during the remaining 13.7 billion years of the universe. Only by ‘vaporizing the vacuum’, returning it to a temperature high enough to evaporate the Higgs field (causing it to have a zero average value throughout space), would the full symmetry/unification become apparent again.
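The DNA-to-galaxy comparison can be turned into the number of "e-folds" N = ln(a_end/a_start), the usual measure of how much inflation occurred (for a constant Hubble rate H the scale factor grows as e^(Ht)); roughly 50 to 60 e-folds are commonly quoted as the minimum needed to solve the horizon and flatness problems. A rough sketch with assumed round sizes:

```python
import math

# Assumed round figures, for illustration only.
DNA_WIDTH_M = 2e-9      # width of a DNA double helix, about 2 nm
MILKY_WAY_M = 1e21      # diameter of the Milky Way, about 100,000 light years
DURATION_S = 1e-27      # "a billionth of a billionth of a billionth of a second"

growth = MILKY_WAY_M / DNA_WIDTH_M    # linear expansion factor a_end / a_start
e_folds = math.log(growth)            # N = ln(a_end / a_start)
hubble_rate = e_folds / DURATION_S    # H, if the expansion is exactly exponential, a ~ exp(H t)

print(f"growth ~ {growth:.1e}, N ~ {e_folds:.1f} e-folds, H ~ {hubble_rate:.1e} s^-1")
```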

According to theory, matter and radiation are produced at the end of inflation. However, whereas these decrease in energy as the universe expands, the inflationary field actually increases its total energy (sapping energy from the expansion, rather like a rubber sheet that is being stretched), since it maintains a constant energy density as it expands. As the universe expands, matter and radiation lose energy to gravity, while an inflation field gains energy from 'repulsive' gravity. In this way we can account for the large amount of matter that is present in the universe today by just considering a small amount of inflationary field (~20 pounds) that occupied a tiny space (10^-26 cm in diameter) at the onset of the inflationary phase. This is because at the onset of inflation the field didn't need to have much energy, since the enormous expansion it was about to spawn would enormously amplify the energy it carried. [This is in contrast with the standard big bang model, in which the universe would need to start with an enormous energy content.] Hence inflationary cosmology, by 'mining' gravity, can produce all the ordinary matter and radiation that we see today from a tiny speck of space filled with the inflationary field.
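The bookkeeping behind this can be sketched with the standard scalings of energy density with the scale factor a: ρ ∝ a^-3 for pressureless matter, ρ ∝ a^-4 for radiation, and ρ constant for a vacuum-like inflationary field. Multiplying by the volume (∝ a³) gives the energy in a region that expands with the universe. Note that for cold matter it is only the kinetic energy that is redshifted; the rest-mass energy in the region stays fixed. The function name is illustrative.

```python
def energy_in_comoving_region(scale_factor, kind):
    """Energy in a region that expands with the universe, relative to its value at
    scale factor a = 1: E = rho(a) * a**3, with rho proportional to a**-3 for
    pressureless matter, a**-4 for radiation and a**0 for a vacuum-like inflaton field."""
    density_exponent = {"matter": -3, "radiation": -4, "inflaton": 0}[kind]
    return scale_factor ** (density_exponent + 3)

for kind in ("matter", "radiation", "inflaton"):
    # Grow the region tenfold in linear size (a thousandfold in volume).
    print(kind, energy_in_comoving_region(10.0, kind))
```

A tenfold growth in size leaves the matter's rest-mass energy unchanged, cuts the radiation energy tenfold, and multiplies the inflationary field's energy a thousandfold.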

Note also that small quantum fluctuations in the early inflationary Higgs field arise due to Heisenberg's uncertainty principle, and these can explain the seeding of the universe with inhomogeneities that coalesced to form present-day galaxies. This theory is in close agreement with recent analysis of the Cosmic Microwave Background Radiation.

Inflation also resolves the problem of why there is a low entropy in the early universe, which gives rise to 'the arrow of time'. During the inflationary epoch the inflationary field made gravity repulsive, which meant that any clumpiness in the fabric of the cosmos (due to Heisenberg's UP) was expanded so rapidly and to such an extent that these warps were stretched quite smooth. Also, because of this rapid expansion of space, the density of matter became very diluted. This is in direct contrast to the post-inflationary period, where the attractive force of gravity would tend to cause the creases of space and the clumping of matter to increase. At the end of the inflationary period, the universe would have grown enormously, removing most of the non-uniformity, and as the field reached the bottom of its potential energy well, it converted its pent-up energy into a nearly uniform bath of particles. Hence the smooth, uniform spatial expansion of matter that results in the inflation model explains why the universe started in a relatively low entropy state. In actual fact the overall entropy increases during inflation (in keeping with the second law of thermodynamics), but much less than it might have done, since the smoothing has reduced the gravitational contribution to entropy (uniformity and reduced clumping mean less entropy, black holes being the most extreme case of clumping, with the greatest entropy). When the inflationary field relinquished its energy it is estimated to have created 10^80 particles of matter and radiation, and this would correspond to an enormous increase in entropy. So even though the gravitational entropy went down, this was more than compensated by the creation of more particle states, in accordance with the second law of thermodynamics. The important thing, however, is that the inflationary expansion/smoothing of the universe created a huge gap between what the entropy of the universe was and what it might have been, thus producing a low entropy in the early universe. This then set the stage for a billion years of gravitational collapse (increasing entropy), whose effects produced the seeding of galaxies and the formation of stars that we continue to witness, as the arrow of time moves forward.

In the above considerations it is necessary to assume that prior to inflation there was a typical high entropy space which was riddled with warps, and that the inflationary field was highly disordered, with its value jumping around due to quantum uncertainties. [Remember that the UP relates to the complementary uncertainties in a particle's position and momentum (~ rate of change of position), and when applied to fields it implies that the more we know about the value of a field (number of particles at a location), the less we know about its rate of change at that location.] Hence we can expect that sooner or later a chance fluctuation within this turbulent, highly energetic pre-inflationary period will cause the value of the field to jump to the correct value in some minute region of space, and in so doing initiate an outward burst of inflationary expansion. Now this understanding is actually close to Boltzmann's suggestion that what we now see is the result of a rare, but every so often expected, fluctuation from total disorder. The advantage here, however, is that only a small fluctuation within a tiny space during the inflationary period is necessary to yield the huge and highly ordered universe that we now observe. A jump to lower entropy within a tiny space was leveraged by inflationary expansion into the vast reaches of the cosmos. Thus inflation doesn't just explain the horizon problem and the dearth of magnetic monopoles; it also accounts for the low entropy of the early universe (the arrow of time), the flatness of space and the slight inhomogeneities from which galaxies formed. Indeed, it is also conjectured that such a sprouting of an inflationary universe could be common enough to have occurred elsewhere during the chaotic primordial state of high entropy, and may continue to do so, repeatedly sprouting new universes from older ones and creating a multiverse (each maybe with different subsequent laws of physics, which evolved at various symmetry-breaking phases). Note that in order to artificially create conditions for such a universe, we would have to cram about 20 pounds of inflationary field into a space of about 10^-78 cubic centimeters, which means a density of about 10^67 times that of an atomic nucleus.
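The quoted density follows from simple arithmetic. A check, assuming a typical nuclear density of about 2.3 × 10^14 g/cm³ and treating the 10^-26 cm region as a cube rather than a sphere (which only changes the answer by a factor of about 2):

```python
POUND_IN_G = 453.59237           # grams per pound (exact by definition)
NUCLEAR_DENSITY = 2.3e14         # assumed typical density of nuclear matter, g/cm^3

mass_g = 20 * POUND_IN_G         # "about 20 pounds" of inflationary field
side_cm = 1e-26                  # region about 10^-26 cm across
volume_cm3 = side_cm ** 3        # treated as a cube: about 10^-78 cm^3
density = mass_g / volume_cm3    # g/cm^3
ratio = density / NUCLEAR_DENSITY

print(f"density ~ {density:.1e} g/cm^3, about {ratio:.0e} times nuclear density")
```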

In summary, through a chance, but every so often expected, fluctuation of a chaotic (high entropy) primordial state, a tiny region of space achieved conditions that led to a brief burst of inflationary expansion. The enormously rapid expansion resulted in space being stretched tremendously large and extremely smooth. As this burst drew to an end, the inflationary field relinquished its hugely amplified energy by filling space nearly uniformly with matter and radiation. As inflation's repulsive gravity diminished, ordinary attractive gravity became dominant. Gravity exploits tiny inhomogeneities caused by quantum fluctuations that are inherent in Heisenberg's UP, and causes matter to clump, forming galaxies and stars and ultimately leading to the formation of solar systems such as our own. After about 7 billion years, repulsive gravity once again became dominant, but this is only relevant on the largest cosmic scales, and the nature of this dark energy, and indeed of dark matter, is as yet unknown.

Footnote: There are still many fine-tuning problems with inflation, and as such inflationary cosmology is not a unique theory and many different versions have been proposed. These include: old inflation, new inflation, warm inflation, hybrid inflation, hyper inflation, assisted inflation, eternal inflation, extended inflation, chaotic inflation, double inflation, weak inflation and hypernatural inflation.

**The historic sequence for this is as follows:

Hawking originally showed that the area of a black hole can never decrease in time, suggesting that it is analogous to entropy. Bekenstein argued that this relationship was more than just an analogy and that a black hole has an actual entropy that is proportional to its area. However, entropy is related to temperature ($\Delta S = \Delta Q / T$), and this was not a property that was associated with black holes at that time. Hawking then went on to discover that a black hole emits radiation and can be given a temperature that is inversely proportional to its mass (and the mass of a black hole directly determines its event horizon). Hence the entropy of a black hole can be shown to be $S = k_B c^3 A / (4 \hbar G)$, and when objects fall into a black hole the entropy of the surrounding universe decreases (since negentropy is a measure of information, which is reduced when objects disappear), while that of the black hole increases so as to maintain/increase the overall value. On the other hand, if the black hole radiates energy, it loses surface area and hence entropy, but the entropy of the outside world will increase to make up for it. However, the entropy that is removed when a highly organized system is dropped into a black hole is much less than the increase of entropy that is returned when the black hole radiates that amount of mass back to the universe, thus implying an overall increase in entropy, in keeping with the second law of thermodynamics. Bekenstein later went on to assert that the amount of information that can be contained in a region is not only finite but is proportional to the area of the surface bounding that region, measured in Planck units (Holographic principle), and this implies that the universe must be discrete on the Planck scale. This Bekenstein Bound is partly a consequence of GR and the 2nd law of thermodynamics, but the argument can be turned around, and it can be shown that, assuming the 2nd law and the Bekenstein Bound, it is possible to derive GR. Hence we have 3 approaches to combining GR with QT, viz. String theory, LQG, and black hole thermodynamics, and each of these indicates (in differing ways) that space and time are discrete (the last two are also relational).
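The Hawking temperature, T = ħc³/(8πGMk), makes the inverse dependence on mass explicit, and together with the entropy S = k c³A/(4ħG) it satisfies the thermodynamic relation dS = dQ/T. A numerical check for a non-rotating black hole, taking the heat absorbed to be the mass-energy c² dM (an assumption of this sketch):

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J s
G = 6.67430e-11          # m^3 kg^-1 s^-2
C = 2.99792458e8         # m/s
K_B = 1.380649e-23       # Boltzmann constant, J/K
M_SUN = 1.989e30         # solar mass, kg (approximate)

def hawking_temperature(mass_kg):
    """T = hbar c^3 / (8 pi G M k_B): inversely proportional to the mass."""
    return HBAR * C**3 / (8 * math.pi * G * mass_kg * K_B)

def bh_entropy(mass_kg):
    """S = k_B c^3 A / (4 G hbar), with A = 4 pi (2GM/c^2)^2 for a Schwarzschild horizon."""
    area = 4 * math.pi * (2 * G * mass_kg / C**2) ** 2
    return K_B * C**3 * area / (4 * G * HBAR)

# First-law check: a small mass increase dm should satisfy dS ~ dQ / T with dQ = c^2 dm.
m, dm = M_SUN, M_SUN * 1e-6
ds = bh_entropy(m + dm) - bh_entropy(m)
dq = C**2 * dm
ratio = ds / (dq / hawking_temperature(m))

print(f"T(solar mass) ~ {hawking_temperature(m):.2e} K")   # about 6e-8 K
print(f"dS / (dQ/T) ~ {ratio:.6f}")                       # close to 1
```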

More recently, new difficulties have become evident, from the fact that

when (organized) objects drop into a black hole, their quantum wave functions

are in a pure (correlated) state, while when the Black Hole eventually

evaporates, the radiation is in a mixed quantum state (i.e. the individual

quanta are not correlated as in an assembly of bosons or fermions). Now

a pure state cannot evolve into a mixed state by means of

a unitary transformation, which is a problem since unitary transformations

are a crucial feature of all quantum wavefunctions (in order that probabilities

evolve in a correct manner). Hence we need to find a way of reconciling

this dilemma, perhaps by invoking a non unitary theory or by discovering

a way of accounting for the extra information that a pure state has in

comparison to a mixed state. If the correlations between the inside and

outside of the black hole are not restored during the evaporation process,

then by the time that the black hole has evaporated completely, an initial

pure state will have evolved to a mixed state, i.e., "information"

will have been lost. For this reason, the issue of whether a pure state

can evolve to a mixed state in the process of black hole formation and

evaporation is usually referred to as the "black hole information

paradox". [There are in fact two logically independent grounds for

the claim that the evolution of an initial pure state to a final mixed

state is in confict with quantum mechanics:

1. Such evolution is asserted to be incompatible with the fundamental

principles of quantum theory, which postulates a unitary time evolution

of a state vector in a Hilbert space.

2. Such evolution necessarily gives rise to violations of causality and/or energy-momentum conservation and, if it occurred in the black hole formation and evaporation process, there would be large violations of causality and/or energy-momentum conservation (via processes involving virtual black holes) in ordinary laboratory physics.]
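The first objection can be illustrated with a small numerical sketch. It assumes NumPy and uses the purity Tr(ρ²) of a density matrix ρ, which is 1 for a pure state and less than 1 for a mixed one; the helper names `purity` and `random_unitary` are hypothetical. Because a unitary evolution ρ → UρU† leaves Tr(ρ²) unchanged, no unitary transformation can take a purity of 1 down to the 1/2 of a maximally mixed qubit. Here the mixed state is obtained, as an illustrative assumption, by discarding one half of an entangled pair, as if it had fallen behind a horizon.

```python
import numpy as np

def purity(rho: np.ndarray) -> float:
    """Tr(rho^2): equals 1 for a pure state and is < 1 for a mixed state."""
    return float(np.real(np.trace(rho @ rho)))

def random_unitary(n: int, rng: np.random.Generator) -> np.ndarray:
    """A random n x n unitary from the QR decomposition of a complex Gaussian matrix."""
    z = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))  # rescale columns by unit phases; q stays unitary

rng = np.random.default_rng(0)

# Pure single-qubit state |psi> = (|0> + i|1>)/sqrt(2), rho = |psi><psi|
psi = np.array([1.0, 1.0j]) / np.sqrt(2)
rho_pure = np.outer(psi, psi.conj())

# Entangled pair (|00> + |11>)/sqrt(2); tracing out the partner leaves a mixed qubit
bell = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2)
rho_pair = np.outer(bell, bell.conj()).reshape(2, 2, 2, 2)
rho_mixed = np.einsum("ijkj->ik", rho_pair)  # partial trace over the second qubit

U = random_unitary(2, rng)
for name, rho in (("pure", rho_pure), ("mixed", rho_mixed)):
    evolved = U @ rho @ U.conj().T  # unitary evolution of a density matrix
    print(f"{name}: purity before = {purity(rho):.3f}, after U = {purity(evolved):.3f}")
```

Whatever unitary U is drawn, the two purities printed for each state agree; turning the pure state into the mixed one needs a non-unitary step, such as the partial trace used to build `rho_mixed`.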

Some argue that a black hole cannot completely disappear but that some of the original information persists as a kind of nugget. Others believe that this information is re-emitted as some form of correlated particles. Another view is that a new quantum theory of gravity will necessarily be non-unitary. Hawking has changed his original view that information is lost and now advocates that the actual probability of subatomic (and virtual) black holes causing the loss of information is minuscule, and that unitarity is only violated in a mild sense. [He proposes that in the presence of black holes the quantum state of a system evolves into a density matrix (a non-pure state, à la von Neumann).] This is somewhat analogous to the improbability of a violation of the 2nd law of thermodynamics, or to the approach to decoherence in QT. In effect, the sum over all the possible histories (geometries) of the universe nullifies the non-unitary effect of black holes in the long term. Susskind, on the other hand, has applied 't Hooft's holographic

principle to string theory and believes that the information is stored in the horizon of a black hole [indeed, using string theory to count the possible configurations of black holes has reproduced the Bekenstein-Hawking formula for their entropy, as have LQG calculations]. This has been strengthened more recently by Maldacena's conjecture (the AdS/CFT correspondence), which proposes an equivalence between string theory (including gravity) in a 5D anti-de Sitter universe and a conformal supersymmetric Yang-Mills theory on its 4D boundary [the gravitational side of this holographic duality is most tractable, reducing to classical supergravity, when N, the number of colours in the SU(N) gauge group, is large]. Maldacena's conjecture not only says that gravity is in some deep way the same as quantum field theory but also implements the holographic principle in a concrete way.

Proof of Bekenstein Bound

Assume the contrary: that there is an entity 'A' such that the amount of information needed to describe it is much larger than its surface area (in Planck units). Now 'A' cannot be a black hole, because any black hole that fits inside 'A' has a horizon smaller than the surface of 'A', so its entropy must be lower than a quarter of the area of that surface in Planck units. If we assume that the entropy of a black hole counts the number of its possible quantum states, this is much less than the information contained in 'A'. Now, according to GR, 'A' has less energy than a black hole that just fits inside it, so as we add energy to 'A' we reach a point at which it collapses into a black hole. However, it now has an entropy equal to a quarter of the area of 'A'. Since this is lower than the entropy of the original 'A', we have succeeded in lowering the entropy of a system, which contradicts the second law of thermodynamics! Consequently, if we accept the 2nd law of thermodynamics, we must believe that the most entropy that we can attribute to 'A' is a quarter of its area.
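To get a feel for the size of "a quarter of the area" in Planck units, the sketch below evaluates S/k_B = A/(4 l_P²), with l_P² = Għ/c³, for the horizon of a black hole. It assumes a non-rotating, uncharged (Schwarzschild) black hole with horizon radius r_s = 2GM/c², CODATA constants and an approximate solar mass; the function names are hypothetical, and the masses in the loop are illustrative values only.

```python
import math

# SI constants (CODATA); the solar mass is an approximate value
G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light, m/s
HBAR = 1.054571817e-34   # reduced Planck constant, J s
M_SUN = 1.989e30         # approximate solar mass, kg

def schwarzschild_radius(mass: float) -> float:
    """Horizon radius r_s = 2GM/c^2 of a non-rotating, uncharged black hole (m)."""
    return 2 * G * mass / C**2

def horizon_entropy(mass: float) -> float:
    """Bekenstein-Hawking entropy in units of k_B: a quarter of the horizon area in Planck units."""
    area = 4 * math.pi * schwarzschild_radius(mass) ** 2
    planck_area = G * HBAR / C**3
    return area / (4 * planck_area)

# Illustrative masses: one, ten and four million solar masses
for solar_masses in (1, 10, 4e6):
    s = horizon_entropy(solar_masses * M_SUN)
    print(f"{solar_masses:g} solar masses: S = {s:.2e} k_B")
```

For one solar mass the result is of order 10⁷⁷ k_B, and because A grows as M², doubling the mass quadruples the entropy, which is why very massive black holes carry such enormous entropies.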

Bekenstein's law. Associated with every horizon that forms a boundary separating an observer from a region which is hidden from them, there is an entropy which measures the amount of information hidden behind it (and is proportional to the area of the horizon).

Unruh's law. Accelerating observers see themselves as embedded

in a gas of hot photons (Rindler particles) at a temperature proportional

to their acceleration. {As their acceleration increases they perceive more virtual radiation. Now although this radiation is (non-locally) correlated, their observable horizon shrinks. This is because light from a sufficiently distant source can never catch up with a continually accelerating observer, even though the observer never reaches the speed of light; the greater the acceleration, the nearer this horizon. Hence, because much of their world cannot be seen, there is a randomness which implies an increased temperature. This is in accordance with the principle of equivalence: for both black holes and accelerating observers, the smaller the 'horizon', the greater the random radiation (temperature). However, in the case of the former this area is the region into which information has fallen and it increases with entropy, while in the latter the visible region that surrounds the observer shrinks with increased acceleration, meaning that the universe of information that is inaccessible increases. In both instances there is an increased temperature and decreased entropy as the horizon is reduced . . . ΔS = ΔQ/T }
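The Unruh temperature has the standard form T = ħa/(2πck_B), and an observer with constant proper acceleration a has a horizon a distance c²/a behind them. The sketch below (plain Python; the function names are hypothetical) evaluates both and shows that their product, ħc/(2πk_B), does not depend on a: halving the distance to the horizon doubles the temperature, in line with "the smaller the horizon, the greater the temperature".

```python
import math

# SI constants (CODATA)
HBAR = 1.054571817e-34   # reduced Planck constant, J s
C = 299_792_458.0        # speed of light, m/s
K_B = 1.380649e-23       # Boltzmann constant, J/K

def unruh_temperature(a: float) -> float:
    """Temperature (K) seen by an observer with constant proper acceleration a (m/s^2)."""
    return HBAR * a / (2 * math.pi * C * K_B)

def rindler_horizon_distance(a: float) -> float:
    """Distance (m) from the accelerating observer to the horizon behind them: c^2 / a."""
    return C**2 / a

for a in (9.81, 9.81e10, 2.5e20):
    t = unruh_temperature(a)
    d = rindler_horizon_distance(a)
    print(f"a = {a:.3g} m/s^2: T = {t:.3g} K, horizon at {d:.3g} m, T*d = {t * d:.4g} K m")
```

For a = 9.81 m/s² the temperature is only about 4 × 10⁻²⁰ K, with the horizon roughly a light-year behind the observer, which is why the effect is so hard to detect.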

* * * * * * * *

NB. The entropy of a black hole is proportional to the area of its event horizon and has an enormous value. [Entropy can be regarded as a measure of how probable it is that a system's state could come about by chance.] Now, because of the large black holes at the centres of galaxies, this black hole entropy easily represents the major contribution to the entropy of the known universe! Because of the second law of thermodynamics, the entropy at the beginning of the universe must have been very small. This beginning is represented by the singularity of the big bang, which must be extraordinarily special or fine-tuned compared to the high-entropy singularities that lie at the centres of black holes. A new theory, quantum gravity, is therefore required to explain the remarkable time asymmetry between the singularities in black holes and that at the big bang.

Fermions and Bosons;

The Janus of Quantum Theory

In Newtonian mechanics objects are described classically as particles whose position and momentum at a particular instant can be specified exactly. A collection of particles in thermal equilibrium is described in terms of Boltzmann statistics, in which the particles are distinguishable. In quantum mechanics a 'particle' is described by a wavefunction which is acted upon by Hermitian operators whose eigenvalues represent measurable observables. Observables such as momentum and position, although complementary, cannot both be specified exactly at the same time (as enshrined in Heisenberg’s uncertainty principle). A wavefunction does, however, have an ‘internal’ structure known as a spinor, which is crucial in determining how the particle behaves. A collection of such quanta is described either by Einstein-Bose statistics (in the case of integer-spin bosons) or by Fermi-Dirac statistics (half-odd-integer-spin fermions), both of which involve ‘particles’ that are indistinguishable. It was in fact Dirac who first introduced the concept of spinors into QT as a means of making Schrödinger’s equation compatible with SR (such quanta obey the statistics of an assembly of fermions).
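The three kinds of statistics differ in the mean number of particles they predict in a single-particle state of energy ε at temperature T and chemical potential μ: exp(−(ε−μ)/kT) for Boltzmann, 1/(exp((ε−μ)/kT) + 1) for Fermi-Dirac and 1/(exp((ε−μ)/kT) − 1) for Einstein-Bose statistics. A minimal sketch, with energies measured in units of kT; the function name is hypothetical.

```python
import math

def mean_occupation(x: float, statistics: str) -> float:
    """Mean number of particles in a state with x = (energy - mu) / kT.

    'boltzmann'     : exp(-x)            (distinguishable particles)
    'fermi-dirac'   : 1 / (exp(x) + 1)   (fermions, never exceeds 1)
    'bose-einstein' : 1 / (exp(x) - 1)   (bosons, requires x > 0)
    """
    if statistics == "boltzmann":
        return math.exp(-x)
    if statistics == "fermi-dirac":
        return 1.0 / (math.exp(x) + 1.0)
    if statistics == "bose-einstein":
        if x <= 0:
            raise ValueError("Bose-Einstein occupation needs energy > chemical potential")
        return 1.0 / (math.exp(x) - 1.0)
    raise ValueError(f"unknown statistics: {statistics}")

for x in (0.1, 1.0, 10.0):
    row = ", ".join(
        f"{s} = {mean_occupation(x, s):.4g}"
        for s in ("boltzmann", "fermi-dirac", "bose-einstein")
    )
    print(f"(E - mu)/kT = {x:>4}: {row}")
```

When ε − μ is many times kT all three agree; close to μ the fermion occupancy never exceeds 1 (the exclusion principle), while the boson occupancy grows without bound, the tendency exploited by lasers and condensates.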

Hence in QT all entities can be classified according to whether they obey fermionic statistics (with an antisymmetric wavefunction) or bosonic (symmetric) statistics. A whole body of theory (culminating in the spin-statistics theorem) shows that particles with half-integer spin obey the former (e.g. those whose spin angular momentum is ħ/2). This in turn means that two fermions cannot occupy the same quantum state (Pauli’s Exclusion Principle), an important fact since it explains the stability of the electron configurations in an atom and hence allows the richness of the periodic table. Bosons, on the other hand, like to be in the same quantum state, and the fact that they can be stimulated to do so is exploited by 'Light Amplification by Stimulated Emission of Radiation' (LASER). Supercooled helium-4 also obeys these statistics (in this case the number of bosons is conserved) and thus allows the production of an Einstein-Bose condensate, the so-called fourth state of matter (whatever happened to plasma?). Integer-spin representations (bosons) faithfully represent the rotational group (they behave like the squares in a Rubik’s cube), while fermions carry a non-faithful representation; hence if you rotate an electron by one revolution it ends up being 'upside-down'. [This property is responsible for its 'anti-social' behaviour, since it gives rise to the antisymmetric wavefunction that characterises an assembly of fermions.]
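The link between antisymmetry and the exclusion principle can be seen directly. The sketch below assumes NumPy, a particle in a one-dimensional box of length L = 1 sampled on a grid, and ignores spin; the function names are hypothetical. It builds the two-particle wavefunction ψ(x₁, x₂) = [φ_a(x₁)φ_b(x₂) ± φ_b(x₁)φ_a(x₂)]/√2 from two single-particle states, with + for bosons and − for fermions.

```python
import numpy as np

L = 1.0
x = np.linspace(0.0, L, 201)  # sample points for both particle coordinates

def box_state(n: int) -> np.ndarray:
    """Particle-in-a-box eigenfunction sqrt(2/L) sin(n pi x / L) on the grid."""
    return np.sqrt(2.0 / L) * np.sin(n * np.pi * x / L)

def two_particle(n_a: int, n_b: int, sign: int) -> np.ndarray:
    """psi[i, j] = psi(x_i, x_j) = [phi_a(x1) phi_b(x2) + sign * phi_b(x1) phi_a(x2)] / sqrt(2).

    sign = +1 gives the symmetric (boson) combination, -1 the antisymmetric (fermion) one.
    """
    a, b = box_state(n_a), box_state(n_b)
    return (np.outer(a, b) + sign * np.outer(b, a)) / np.sqrt(2.0)

boson_same = two_particle(1, 1, +1)
fermion_same = two_particle(1, 1, -1)
fermion_diff = two_particle(1, 2, -1)

print("max |psi|, two bosons in n=1:  ", np.abs(boson_same).max())
print("max |psi|, two fermions in n=1:", np.abs(fermion_same).max())
print("fermions antisymmetric under x1 <-> x2:", np.allclose(fermion_diff, -fermion_diff.T))
print("max |psi| for fermions at x1 == x2:", np.abs(np.diag(fermion_diff)).max())
```

The antisymmetric (fermionic) combination vanishes identically when both particles are put in the same state, and even for different states it vanishes wherever x₁ = x₂, whereas the symmetric (bosonic) combination is enhanced when the states coincide.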

Let us take another look at the origin of this double-headed aspect of QT. A physical law needs to be invariant under rotation, hence we need to know how to express a transformation so as to maintain the symmetry of an equation with respect to all observers, whatever direction they are oriented in. Wavefunctions (state vectors) exist in Hilbert space, which is a complex vector space, and undergo unitary transformations in order that probabilities are conserved. The special unitary group SU(2) achieves this and acts on the 2-dimensional space of spinors (so called because they represent spin states). When the XYZ axes are rotated by an angle θ (e.g. 360°) the spinor rotates by only θ/2 (i.e. 180°). Hence we have two important representations of the rotational group, viz. SO(3) acting on real vectors in Euclidean 3-D space and SU(2) acting on complex 2-D spinor space. The latter is actually simpler (simply connected), while the former is doubly connected.
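The half-angle behaviour can be checked numerically. The sketch below assumes NumPy and the standard spin-½ convention U = exp(−iθ n·σ/2) = cos(θ/2) I − i sin(θ/2) n·σ for a rotation by θ (in radians) about the unit axis n, and compares it with the ordinary 3 × 3 rotation matrix given by Rodrigues' formula; the function names are hypothetical.

```python
import numpy as np

# Pauli matrices sigma_x, sigma_y, sigma_z
PAULI = (
    np.array([[0, 1], [1, 0]], dtype=complex),
    np.array([[0, -1j], [1j, 0]], dtype=complex),
    np.array([[1, 0], [0, -1]], dtype=complex),
)

def su2_rotation(theta: float, axis) -> np.ndarray:
    """Spinor rotation U = cos(theta/2) I - i sin(theta/2) (n . sigma)."""
    n = np.asarray(axis, dtype=float)
    n = n / np.linalg.norm(n)
    n_sigma = sum(n_k * s_k for n_k, s_k in zip(n, PAULI))
    return np.cos(theta / 2) * np.eye(2) - 1j * np.sin(theta / 2) * n_sigma

def so3_rotation(theta: float, axis) -> np.ndarray:
    """Vector rotation via Rodrigues' formula R = I + sin(theta) K + (1 - cos(theta)) K^2."""
    n = np.asarray(axis, dtype=float)
    n = n / np.linalg.norm(n)
    k = np.array([[0, -n[2], n[1]], [n[2], 0, -n[0]], [-n[1], n[0], 0]])
    return np.eye(3) + np.sin(theta) * k + (1 - np.cos(theta)) * (k @ k)

for turns in (1, 2):
    theta = 2 * np.pi * turns
    u = su2_rotation(theta, [0, 0, 1])
    r = so3_rotation(theta, [0, 0, 1])
    print(f"{360 * turns} deg: R == I: {np.allclose(r, np.eye(3))}, "
          f"U == +I: {np.allclose(u, np.eye(2))}, U == -I: {np.allclose(u, -np.eye(2))}")
```

A single turn gives R = I for vectors but U = −I for spinors (every spinor changes sign); only two turns bring U back to +I.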

Wavefunctions must transform under this (compact) unitary group in order to preserve transition amplitudes; however, relativistic (Lorentz) transformations involve a non-compact Lie group. This can be achieved, as already mentioned for simple rotations, by using a spinor representation, which under the action of the Lorentz group undergoes a unimodular transformation (an element of SL(2,C)) and, when restricted to the rotational subgroup, a transformation that is also unitary. The Pauli matrices generate such a special unitary group, SU(2). Hence if an observer’s axes are rotated, the group that transforms this wavefunction must be both unimodular and unitary. This requires using spinor space, not vector space: as vector coordinates undergo a Euclidean rotation, the spinor wavefunction evolves in a unitary manner.
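The claim that the Pauli matrices generate transformations that are both unitary and unimodular can be tested directly: exponentiating any real combination of them, U = exp(−i Σ_k θ_k σ_k/2), gives U†U = I and det U = 1 (the latter because the Pauli matrices are traceless). The sketch below assumes NumPy; the function names are hypothetical. It also maps U to the 3 × 3 rotation it induces on vectors, R_ij = ½ Tr(σ_i U σ_j U†), and shows that U and −U give the same R, which is the two-to-one correspondence between spinor and vector rotations.

```python
import numpy as np

PAULI = [
    np.array([[0, 1], [1, 0]], dtype=complex),
    np.array([[0, -1j], [1j, 0]], dtype=complex),
    np.array([[1, 0], [0, -1]], dtype=complex),
]

def expm_hermitian(h: np.ndarray) -> np.ndarray:
    """exp(-i h) for a Hermitian matrix h, computed from its eigendecomposition."""
    w, v = np.linalg.eigh(h)
    return v @ np.diag(np.exp(-1j * w)) @ v.conj().T

def generated_element(params) -> np.ndarray:
    """U = exp(-i sum_k params[k] * sigma_k / 2), generated by the Pauli matrices."""
    h = sum(p * s for p, s in zip(params, PAULI)) / 2
    return expm_hermitian(h)

def rotation_from_spinor(u: np.ndarray) -> np.ndarray:
    """The SO(3) matrix R_ij = (1/2) Tr(sigma_i U sigma_j U^dagger) induced by U."""
    return np.array([[0.5 * np.trace(PAULI[i] @ u @ PAULI[j] @ u.conj().T).real
                      for j in range(3)] for i in range(3)])

rng = np.random.default_rng(1)
u = generated_element(rng.normal(size=3))
r = rotation_from_spinor(u)

print("unitary:", np.allclose(u.conj().T @ u, np.eye(2)))
print("unimodular:", np.isclose(np.linalg.det(u), 1.0))
print("R orthogonal with det 1:", np.allclose(r.T @ r, np.eye(3)), np.isclose(np.linalg.det(r), 1.0))
print("U and -U give the same rotation:", np.allclose(rotation_from_spinor(-u), r))
```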

This spinor space is not, however, a faithful representation of the rotational group, and it leads to the result that two full rotations (720°) are needed to return the spinor to its original state; it is this which is the cause of the Landé factor g = 2. [One rotation induces a negative